We are living through a period of information technology in which everyone is crazy about Microservices. Whoever coined the term a few years ago clearly did not know, or did not care, that the concept of µServices had already been introduced in 2010 by Peter Kriens (Software Architect at the OSGi Alliance). His idea is summed up in the excerpt from his article quoted below, where the term "micro" actually makes sense.

What I am promoting is the idea of µServices, the concepts of an OSGi service as a design primitive. (cit. Peter Kriens)

So we now have two terms that are pronounced the same way but mean different things when it comes to implementation! Fortunately, we can write them differently.

So we will write µServices to refer to small but effective Java services, independent and cohesive, which let you achieve the same goals without the overhead of Microservices.

To conclude this brief discussion of terminology before moving on to the substance, I recommend reading the article Software mixed with marketing: micro-services, also by Peter Kriens.

In this article, which I would consider a continuation of my talk Liferay as Digital Experience Platform in the context of Microservices (given at Liferay Boot Camp 2020 Summer Edition), I would like to help you evaluate the OSGi framework as a valid solution for implementing your Microservices architecture.

To follow the article effectively, a basic knowledge of the OSGi framework is required; for this I recommend the brief presentation OSGi and Liferay 7, given in 2016 at the Liferay Italian User Group in Bologna.

1. OSGi µServices

OSGi services, or µServices, are the key concept used to build the foundation of modular code. At the lowest level, OSGi governs class loading: each module (or bundle) has its own class loader. A bundle declares its external dependencies using the Import-Package directive. Only packages explicitly exported through the Export-Package directive can be used by other bundles.

This level of modularity ensures that only APIs (Java interfaces) are shared between bundles, while implementation classes remain strictly hidden.
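The Export-Package header is plain manifest metadata, so it can be inspected with nothing more than the JDK. The sketch below is a hypothetical helper (ManifestInspector is not part of any OSGi API) that reads a manifest like the one in Source Code 1 using java.util.jar.Manifest; in a real framework it is the resolver that parses these headers and wires each Import-Package to a matching Export-Package.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.jar.Manifest;

// Hypothetical helper: reads OSGi headers from MANIFEST.MF text using only the JDK
public class ManifestInspector {

    // Returns the raw Export-Package header value, or null if the bundle exports nothing
    public static String exportedPackages(String manifestText) {
        try {
            Manifest manifest = new Manifest(new ByteArrayInputStream(
                manifestText.getBytes(StandardCharsets.UTF_8)));
            return manifest.getMainAttributes().getValue("Export-Package");
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // A trimmed-down version of the API bundle manifest shown in Source Code 1
        String manifestText =
            "Manifest-Version: 1.0\n"
            + "Bundle-ManifestVersion: 2\n"
            + "Bundle-SymbolicName: it.smc.techblog.apache.aries.rsa.examples.raspberrypi.api\n"
            + "Export-Package: it.smc.techblog.apache.aries.rsa.examples.raspberrypi.api;version=\"1.0.0\"\n";

        System.out.println(exportedPackages(manifestText));
    }
}
```

The same approach works for Import-Package. Note that the header comes back as a single string: splitting it correctly requires handling quoted attributes, since version ranges such as "[1.0,2.0)" contain commas.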

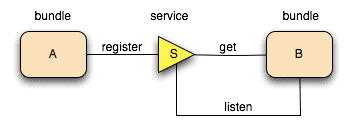

Other bundles can then use a service by looking it up through its interface. A bundle uses the service via its interface, without having to know which implementation is in use or who provided it. In OSGi this decoupling is made possible by the Service Registry, which is part of the OSGi framework. A bundle can register a service in this registry: doing so registers an instance of an implementation class under the interface it implements.
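The lookup-by-interface idea can be illustrated with a deliberately tiny, in-memory model. This is not the OSGi API (in OSGi the real entry points are BundleContext.registerService and BundleContext.getServiceReference); the class MiniServiceRegistry and its register/lookup methods are hypothetical, written only to show that a consumer asks for an interface and never names the implementation class.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical toy registry: NOT the OSGi Service Registry, just its core idea
public class MiniServiceRegistry {

    // API contract, shared by provider and consumer
    public interface WhoIamService {
        String whoiam();
    }

    // Implementation class: consumers never reference it directly
    static class WhoIamServiceImpl implements WhoIamService {
        public String whoiam() {
            return "I am the in-VM implementation";
        }
    }

    // Instances are stored under the name of the interface they are registered with
    private final Map<String, List<Object>> services = new HashMap<>();

    public <S> void register(Class<S> iface, S instance) {
        services.computeIfAbsent(iface.getName(), k -> new ArrayList<>()).add(instance);
    }

    // Returns the first registered instance for the interface, if any
    public <S> Optional<S> lookup(Class<S> iface) {
        List<Object> candidates =
            services.getOrDefault(iface.getName(), Collections.emptyList());
        return candidates.stream().findFirst().map(iface::cast);
    }

    public static void main(String[] args) {
        MiniServiceRegistry registry = new MiniServiceRegistry();

        // Provider side: register an instance under its interface
        registry.register(WhoIamService.class, new WhoIamServiceImpl());

        // Consumer side: only the interface is known
        registry.lookup(WhoIamService.class)
            .ifPresent(service -> System.out.println(service.whoiam()));
    }
}
```

The real registry adds what this toy leaves out: service properties, ranking, dynamic unregistration and events that tell consumers when services come and go.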

Briefly summarizing, we could say that:

- µServices (or services) are made up of a set of Java interfaces and classes registered in the Service Registry;

- a component can be published as a service;

- a component may request a service;

- services are registered by a Bundle Activator or, declaratively, by Declarative Services (DS) components, as in the examples below.

The figure below shows the Service Registry, where each bundle can register its own services and also find the services it needs to consume; we could say it is a sort of "folder".

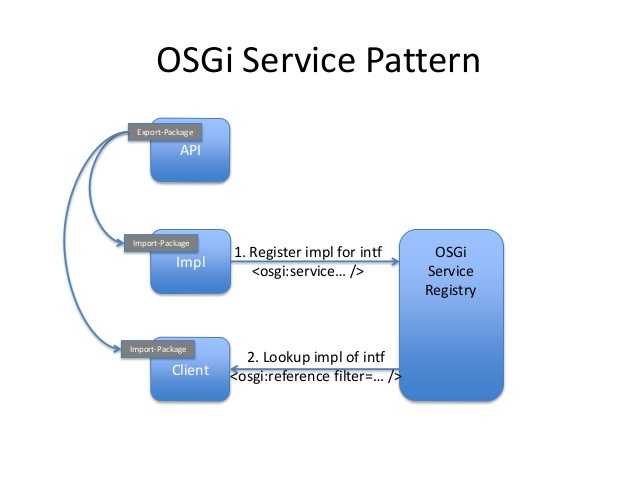

The figure below shows the classic OSGi Service Pattern followed by modular applications. A typical application consists of:

- an API bundle that defines all the APIs (Java interfaces) and exports them "outward" via the Export-Package directive;

- an implementation (Service) bundle that implements the interfaces defined by the API bundle, importing them via the Import-Package directive; the implemented services are registered in the Service Registry;

- a Client (or Consumer) bundle that consumes the services defined by the API, again via the Import-Package directive; references to the services it consumes are obtained from the Service Registry.

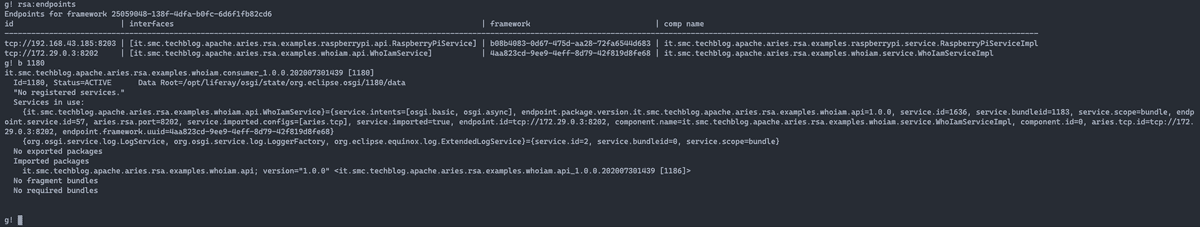

The figure below shows an example of a modular OSGi application running inside an Apache Karaf OSGi container, where you can see the separation into three bundles: API, Service (impl) and Consumer (client).

Source Code 1 shows the MANIFEST.MF contained in the API bundle, where you can see the Export-Package directive declaring the package to be exported (and therefore visible externally) together with its specific version.

```
Manifest-Version: 1.0
Bnd-LastModified: 1595983877560
Bundle-Description: Aries Remote Service Admin Examples - Raspberry Pi API
Bundle-ManifestVersion: 2
Bundle-Name: Aries Remote Service Admin Examples - Raspberry Pi API
Bundle-SymbolicName: it.smc.techblog.apache.aries.rsa.examples.raspberrypi.api
Bundle-Version: 1.0.0.202007290051
Created-By: 1.8.0_181 (Oracle Corporation)
Export-Package: it.smc.techblog.apache.aries.rsa.examples.raspberrypi.api;version="1.0.0"
Require-Capability: osgi.ee;filter:="(&(osgi.ee=JavaSE)(version=1.8))"
Tool: Bnd-4.2.0.201903051501
```

The following three Java sources show, respectively:

- the definition of the interface (API bundle);

- the implementation of the service whose interface is defined by the API bundle (Impl or Service bundle);

- the use, or consumption, of the service (Client or Consumer bundle).

```java
package it.smc.techblog.apache.aries.rsa.examples.whoiam.api;

/**
 * @author Antonio Musarra
 */
public interface WhoIamService {

    public String whoiam();

}
```

```java
package it.smc.techblog.apache.aries.rsa.examples.whoiam.service;

import it.smc.techblog.apache.aries.rsa.examples.whoiam.api.WhoIamService;

...

/**
 * @author Antonio Musarra
 */
@Component(immediate=true)
public class WhoIamServiceImpl implements WhoIamService {

    @Override
    public String whoiam() {
        Bundle bundle = _bc.getBundle();

        String hostAddress = "NA";

        try {
            hostAddress = InetAddress.getLocalHost().getHostAddress();
        }
        catch (UnknownHostException uhe) {
            // LOG_ERROR is a static constant of LogService
            _log.log(LogService.LOG_ERROR, uhe.getMessage());
        }

        String response =
            "Who I am : {Bundle Id: %s, Bundle Name: %s, Bundle Version: %s, Framework Id: %s, HostAddress: %s}";

        return String.format(
            response, bundle.getBundleId(),
            bundle.getHeaders().get(Constants.BUNDLE_NAME), bundle.getVersion(),
            _bc.getProperty(Constants.FRAMEWORK_UUID), hostAddress);
    }

    // DS passes the BundleContext to the activate method
    @Activate
    protected void activate(BundleContext bundleContext) {
        _bc = bundleContext;
    }

    private BundleContext _bc;

    @Reference
    private LogService _log;

}
```

```java
package it.smc.techblog.apache.aries.rsa.examples.whoiam.consumer;

import it.smc.techblog.apache.aries.rsa.examples.whoiam.api.WhoIamService;

...

/**
 * @author Antonio Musarra
 */
@Component(immediate=true)
public class WhoIamConsumer {

    WhoIamService _whoIamService;

    // Called once the mandatory WhoIamService reference has been bound
    @Activate
    public void activate() {
        System.out.println("Sending to Who I am service");

        System.out.println(_whoIamService.whoiam());
    }

    // DS bind method: the service is obtained from the Service Registry by interface
    @Reference
    public void setWhoIamService(WhoIamService whoIamService) {
        this._whoIamService = whoIamService;
    }

}
```

The µServices we have just seen, very briefly and rather quickly, are in-VM: everything runs within a single JVM with practically zero overhead. No proxy is required, so a call to a service is ultimately just a direct method call. In this model, good practice dictates that each service does only one thing, which makes services easy to replace and easy to reuse.

Now it's time for the big question: is it possible to distribute µServices across multiple OSGi containers while keeping the same pattern (see Figure 2)? Of course! We will see how later.

2. Microservices from the OSGi perspective

Many different definitions of Microservices can be found, but they all come back to the concept of an architectural style that favors the development of loosely coupled systems, whose components have a low level of coupling, can be deployed independently, communicate through lightweight protocols, and are independently testable and scalable.

This implies that Microservices embrace modularity, and in this sense OSGi is a framework designed from the start around in-VM µServices. More precisely, the table below shows how the various concepts expressed by Microservices map onto the OSGi world.

| Capability | Enabling OSGi functionality |
|---|---|
| Configuration Management | OSGi Config Admin, defined in the OSGi Compendium specification |
| Service Discovery | OSGi Service Registry, defined in the OSGi Core specification |
| Dynamic Routing | Dynamic routing between services (potentially in different modules) can be established through OSGi filters, which retrieve a service reference based on the service properties using an LDAP-like syntax (RFC 4515) |
| API Interface | The OSGi Remote Services specification defines a mechanism for exposing OSGi services to the outside world (effectively providing a mechanism for defining a module's public API) |
| Security | Some features (such as permission checking) are provided by Permission Admin and Conditional Permission Admin, defined by the OSGi specification. Additional security features are provided by other specifications such as User Admin Service |
| Centralized Logging | Provided by the OSGi Log Service specification |
| Packaging | OSGi bundles are distributed as JAR artifacts, so they are also ready for deployment in environments that require containers |
| Deployment | From the OSGi console, from the file system (hot deploy), or via a dedicated tool/API (specific to the OSGi runtime) |
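Dynamic routing by service properties is expressed in OSGi with filter strings such as (&(objectClass=...)(region=eu)); the framework provides the real parser (FrameworkUtil.createFilter). The toy matcher below is a hypothetical sketch that supports only equality, & and |, over single-valued string properties (no escaping, wildcards, negation or case-insensitive keys), and the region property is invented for the example. It shows how a filter selects one provider among several registered under the same interface.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical toy matcher for a small subset of the OSGi / RFC 4515 filter syntax
public class TinyFilter {

    private final String src;
    private int pos;

    private TinyFilter(String src) {
        this.src = src;
    }

    // Returns true if the service properties satisfy the filter
    public static boolean matches(String filter, Map<String, String> props) {
        TinyFilter parser = new TinyFilter(filter.trim());
        boolean result = parser.parse(props);
        if (parser.pos != parser.src.length()) {
            throw new IllegalArgumentException("Unexpected trailing text in " + filter);
        }
        return result;
    }

    // filter := '(' ( '&' filter+ | '|' filter+ | key '=' value ) ')'
    private boolean parse(Map<String, String> props) {
        expect('(');
        if (pos >= src.length()) {
            throw new IllegalArgumentException("Truncated filter");
        }
        char op = src.charAt(pos);
        boolean result;
        if (op == '&' || op == '|') {
            pos++;
            List<Boolean> operands = new ArrayList<>();
            // Every operand is parsed (no short-circuit) so the cursor stays consistent
            while (pos < src.length() && src.charAt(pos) == '(') {
                operands.add(parse(props));
            }
            result = (op == '&')
                ? operands.stream().allMatch(b -> b)
                : operands.stream().anyMatch(b -> b);
        } else {
            int eq = src.indexOf('=', pos);
            int close = src.indexOf(')', pos);
            if (eq < 0 || close < eq) {
                throw new IllegalArgumentException("Expected key=value at index " + pos);
            }
            String key = src.substring(pos, eq);
            String value = src.substring(eq + 1, close);
            result = value.equals(props.get(key));
            pos = close;
        }
        expect(')');
        return result;
    }

    private void expect(char c) {
        if (pos >= src.length() || src.charAt(pos) != c) {
            throw new IllegalArgumentException("Expected '" + c + "' at index " + pos);
        }
        pos++;
    }

    public static void main(String[] args) {
        // Two providers of the same interface, distinguished only by a property
        Map<String, String> euProvider = new HashMap<>();
        euProvider.put("objectClass", "WhoIamService");
        euProvider.put("region", "eu");

        Map<String, String> usProvider = new HashMap<>(euProvider);
        usProvider.put("region", "us");

        String filter = "(&(objectClass=WhoIamService)(region=eu))";
        System.out.println("eu provider selected: " + matches(filter, euProvider));
        System.out.println("us provider selected: " + matches(filter, usProvider));
    }
}
```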

Moving beyond the confines of a single OSGi environment, interesting challenges come into play, which obviously include considerations about load balancing, auto-scaling, resilience, fault tolerance and back-pressure, to name a few.

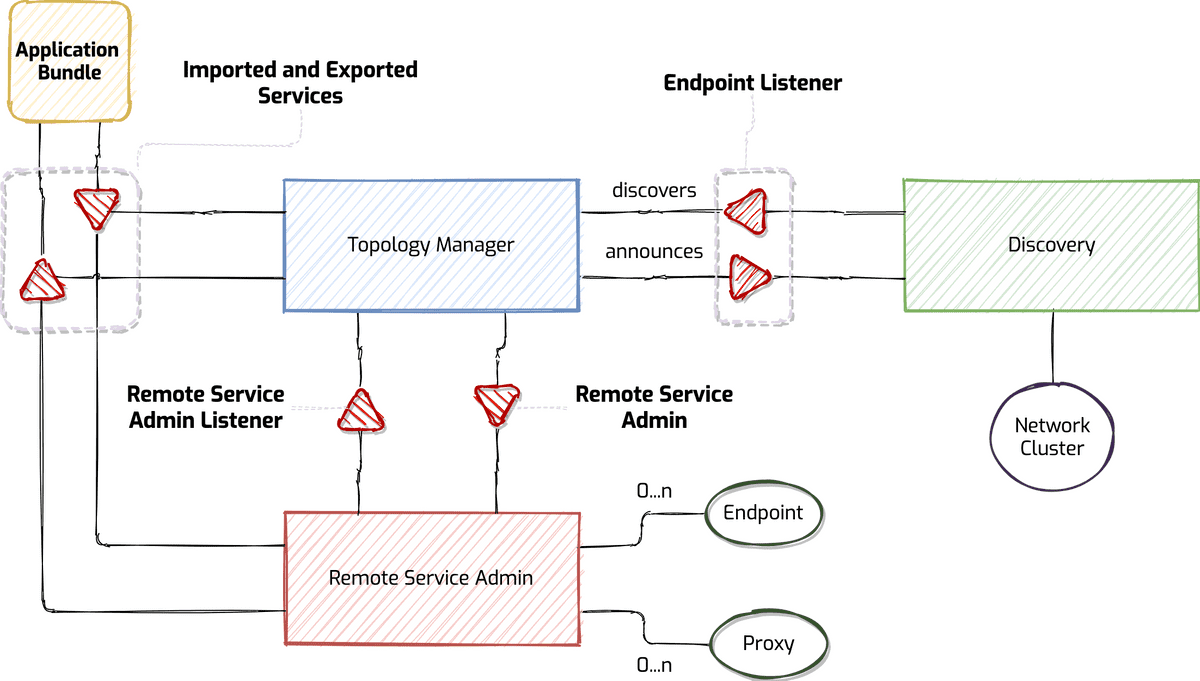

All these aspects can be enabled via the Remote Services and Remote Service Admin specifications, defined in the OSGi Compendium.

In particular, the Remote Service Admin specification defines a pluggable management agent, called the Topology Manager, that can address the various concerns mentioned above that characterize the interaction between OSGi remote services (that is, the communication channel between the different OSGi containers that make up the set of Microservices).

The best-known frameworks implementing the two OSGi Remote Services specifications are:

- Apache CXF Distributed OSGi, which allows you to:

  - offer and consume SOAP and REST-based services, with Declarative Services being the simplest way to interact with them;

  - communicate transparently between OSGi containers.

- Apache Aries RSA (Remote Service Admin);

- Eclipse Communication Framework (ECF).

Throughout this article we will refer to the Apache Aries RSA (Remote Service Admin) implementation because, in my opinion, it is the simplest and most flexible to use, as well as the most widespread.

Having seen which specifications let us distribute OSGi bundles, and therefore µServices, beyond the boundaries of their container, it is time to present a possible scenario, focusing on the practical side.

3. An example scenario of Remote µServices

Let's assume a scenario with four different OSGi containers: two exclusively offer services, thus acting as service providers; one offers services and also acts as a client (or consumer); and the last one acts exclusively as a consumer. On two of the three OSGi containers acting as service providers, we will install the same service.

So, drawing up our shopping list, we have to develop two classic OSGi-style projects:

- One that contains three bundles: API, Service and Consumer for the service that we will call Who I am.

- One that contains three bundles: API, Service and Consumer for the service that we will call Raspberry Pi.

The table below details, for each service, the OSGi bundles to be developed.

| Service | Bundle Name | Description |
|---|---|---|
| Who I am Service | WhoIam API | Bundle that defines the Who I am service contract via the Java interface. The bundle exports the interface package (see Source Code 2). |
| | WhoIam Service | Bundle that implements the Who I am service interface. The bundle imports the interface package (see Source Code 3). |
| | WhoIam Consumer | Bundle that consumes the Who I am service. The reference to the service is obtained transparently from the Service Registry. The bundle imports the interface package (see Source Code 4). |
| Raspberry Pi Service | Raspberry Pi API | Bundle that defines the Raspberry Pi service contract via the Java interface. The bundle exports the interface package. |
| | Raspberry Pi Service | Bundle that implements the Raspberry Pi service interface. The bundle imports the interface package. |
| | Raspberry Pi Consumer | Bundle that consumes the Raspberry Pi service. The reference to the service is obtained transparently from the Service Registry. The bundle imports the interface package. |

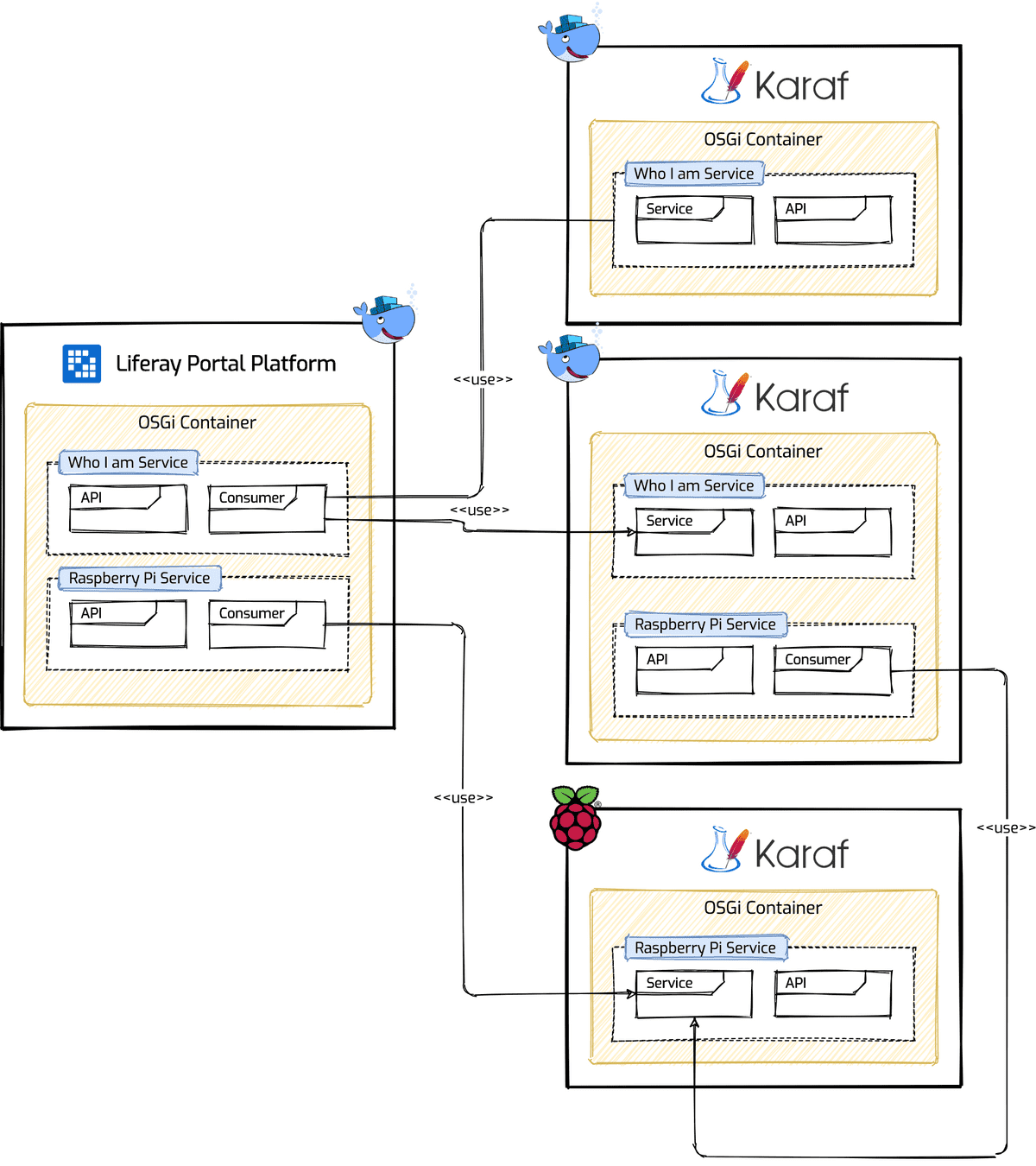

The diagram below shows the scenario we are going to implement with a distributed µServices architecture, while remaining within our OSGi context. Those who are already used to developing with the OSGi framework will appreciate that, in the end, nothing changes in the way they write code.

From the diagram you can see that we have four OSGi containers available: one is inside a Liferay Portal Platform instance and the remaining three are all Apache Karaf instances. To make things more interesting, I deliberately included Liferay in the scenario, as well as an Apache Karaf instance running on a Raspberry Pi.

The diagram also shows how the bundles (listed in Table 2) are distributed across the four OSGi containers. On Liferay Portal we have the bundles that consume the Who I am and Raspberry Pi services. On two Apache Karaf containers we have the bundles that provide the Who I am service and, on one of these two, also the bundles that consume the Raspberry Pi service. On the third and last Apache Karaf container we have the bundles that provide the Raspberry Pi service.

Now that we have a clear picture of our scenario, we need to understand how to enable our OSGi containers to communicate with each other.

4. Apache Aries RSA (Remote Service Admin)

The OSGi R7 Compendium specification has two chapters related to OSGi Remote Services: 100 Remote Services (RS) and 122 Remote Service Admin Service Specification (RSA). These specs, however, are primarily intended for those who implement RS/RSA rather than for application developers.

Throughout the article I have always referred to the R7 release of the OSGi specifications; I would, however, like to mention that the OSGi Remote Services specifications were introduced in the OSGi Service Platform Enterprise Specification, Release 4, Version 4.2.

To recap: OSGi services are simply Java objects that expose one or more Java interfaces. Instances are dynamically registered in the Service Registry under their interface names, together with their properties. As described in the specifications, OSGi services offer numerous advantages, including support for dynamics, security, a clear-cut separation between service contract and implementation, Semantic Versioning and more. An OSGi service instance has three parts:

- one or more service interfaces (Java interfaces);

- an implementation of service interfaces;

- a consumer of the service (via the service interface).

OSGi Remote Services simply extends the Service Registry to allow access to OSGi services from outside the process, that is, remote access.

How does this differ from non-OSGi remoting such as HTTP/REST? First of all, OSGi remote services retain all the properties of OSGi services, e.g. dynamics, security, versioning and the separation between contract and implementation, as well as full support for Declarative Services and other injection frameworks.

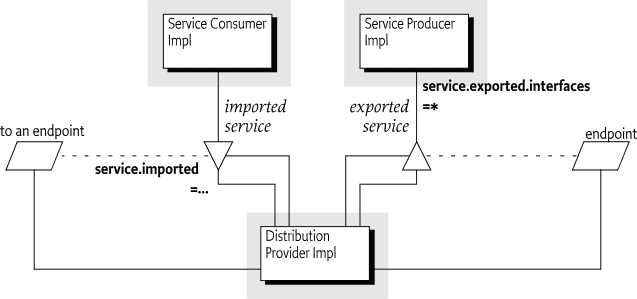

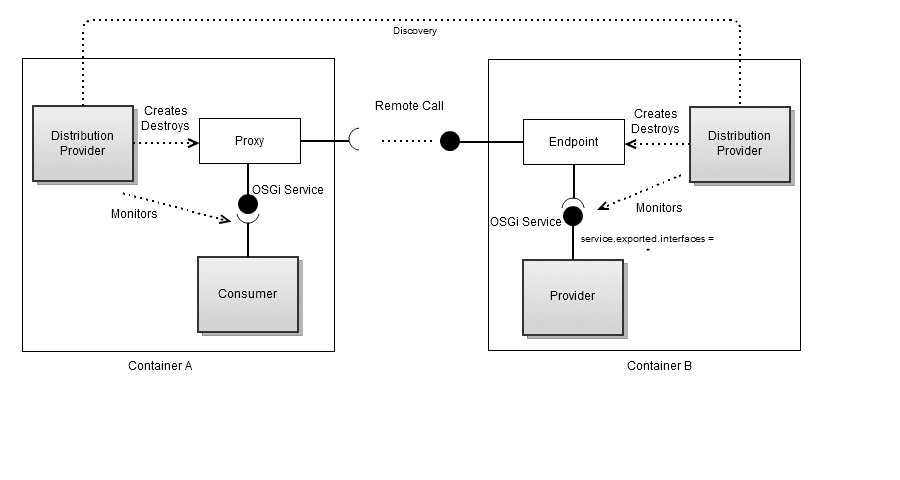

A Distribution Provider can take advantage of the loose coupling between bundles to export a registered service by creating an endpoint. Conversely, the Distribution Provider can create a proxy that accesses an endpoint and then register this proxy as an imported service. A framework can contain several Distribution Providers simultaneously, each importing and exporting services independently.
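The import side just described, a local proxy registered in place of a remote endpoint, can be sketched with the JDK's java.lang.reflect.Proxy. This is an illustration under assumptions, not Aries RSA code: the Transport interface is hypothetical and stands for whatever protocol (TCP, CXF, Fastbin) actually carries the call; here it is an in-memory lambda so that the example runs in a single JVM.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;

// Hypothetical sketch of the "import" side of a Distribution Provider:
// a local proxy implements the service interface and forwards calls to a transport.
public class RemoteProxyDemo {

    // Service contract shared by both sides (mirrors WhoIamService from Source Code 2)
    public interface WhoIamService {
        String whoiam();
    }

    // Hypothetical transport abstraction standing in for TCP, CXF, Fastbin, ...
    public interface Transport {
        Object send(String methodName, Object[] args);
    }

    @SuppressWarnings("unchecked")
    public static <S> S importService(Class<S> iface, Transport transport) {
        InvocationHandler handler = (proxy, method, args) -> {
            if (method.getDeclaringClass() == Object.class) {
                // equals/hashCode/toString are answered locally, never sent over the wire
                switch (method.getName()) {
                    case "equals":
                        return proxy == args[0];
                    case "hashCode":
                        return System.identityHashCode(proxy);
                    default:
                        return "Proxy for " + iface.getName();
                }
            }
            // Each interface method becomes a message; overloads are not distinguished here
            return transport.send(method.getName(), args);
        };
        return (S) Proxy.newProxyInstance(
            iface.getClassLoader(), new Class<?>[] {iface}, handler);
    }

    public static void main(String[] args) {
        // The "exported" implementation, living on the other side of the transport
        WhoIamService exported = () -> "Who I am : {answered by the exported service}";

        // In-memory stand-in for the network: dispatch by method name
        Transport inMemory = (methodName, callArgs) -> {
            if ("whoiam".equals(methodName)) {
                return exported.whoiam();
            }
            throw new UnsupportedOperationException(methodName);
        };

        WhoIamService imported = importService(WhoIamService.class, inMemory);
        System.out.println(imported.whoiam());
        System.out.println(imported);
    }
}
```

The consumer only ever sees a WhoIamService, which is why the client code in Source Code 4 would not need to change when the implementation moves to another container.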

An endpoint is a communication access mechanism to a service in another framework, a (web) service, another process, a queue, etc., which requires a communication protocol. The mapping between services and endpoints, together with their communication characteristics, is called the topology.

The Apache Aries Remote Service Admin (RSA) project allows you to use OSGi services transparently for remote communication. OSGi services can be marked for export by adding the service property service.exported.interfaces=*. Various other properties can be used to customize how the service is exposed.

The figure below shows the Apache Aries Remote Service Admin (RSA) architecture; note that it reflects the components described by the OSGi Remote Services specifications (RS and RSA). The Endpoint describes a service through its service interfaces, URL and any other properties needed to import the remote service successfully. The Endpoint Listener is a service that is notified when remote endpoints (described by OSGi filters) are registered or unregistered.

By default, the Topology Manager exposes all local services that are appropriately marked for export, and imports all requested services for which matching remote endpoints exist. The Topology Manager can also add properties to change the way services are exposed. For each service to be exposed it calls the Remote Service Admin, which performs the actual export; the endpoint listeners are then notified of the new endpoint. The Topology Manager also listens for service requests from consumers and creates the corresponding endpoint listeners.
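The export half of this flow, in which only services carrying service.exported.interfaces become endpoints and endpoint listeners are told about them, can be modeled in a few lines. Everything here is a hypothetical simplification: endpoint listeners are plain Consumer&lt;String&gt; callbacks, the "endpoint:" identifier format is invented, and any non-null property value is treated like "*". In Aries RSA these roles are played by the Topology Manager, the RemoteServiceAdmin service and the EndpointListener/EndpointEventListener interfaces of org.osgi.service.remoteserviceadmin.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical toy of the Topology Manager's export decision; not Aries RSA code
public class ToyTopologyManager {

    // Property that marks a service for export, as defined by OSGi Remote Services
    static final String EXPORTED_INTERFACES = "service.exported.interfaces";

    private final List<Consumer<String>> endpointListeners = new ArrayList<>();
    private final List<String> exportedEndpoints = new ArrayList<>();

    public void addEndpointListener(Consumer<String> listener) {
        endpointListeners.add(listener);
    }

    // Called for every locally registered service; returns true if it was exported
    public boolean serviceRegistered(String iface, Map<String, Object> properties) {
        if (properties.get(EXPORTED_INTERFACES) == null) {
            return false; // an ordinary µService: it stays in-VM
        }
        // Stand-in for the Remote Service Admin creating the endpoint
        String endpoint = "endpoint:" + iface;
        exportedEndpoints.add(endpoint);
        // Notify listeners (e.g. Discovery) about the new endpoint
        endpointListeners.forEach(listener -> listener.accept(endpoint));
        return true;
    }

    public List<String> exportedEndpoints() {
        return exportedEndpoints;
    }

    public static void main(String[] args) {
        ToyTopologyManager tm = new ToyTopologyManager();
        tm.addEndpointListener(e -> System.out.println("Discovery notified of " + e));

        Map<String, Object> localOnly = new HashMap<>();
        Map<String, Object> markedForExport = new HashMap<>();
        markedForExport.put(EXPORTED_INTERFACES, "*");

        tm.serviceRegistered("WhoIamService", localOnly);
        tm.serviceRegistered("WhoIamService", markedForExport);
        System.out.println(tm.exportedEndpoints());
    }
}
```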

The Topology Manager is the best place to implement governance rules at the system level. Some examples of what can be done:

- securing remote endpoints with SSL/TLS, authentication and auditing;

- exporting OSGi services annotated with JAX-WS and JAX-RS annotations even though they are not explicitly marked for export.

As for the role of the Topology Manager, it does not implement these features directly, but makes all the necessary calls to a Remote Service Admin.

The Remote Service Admin is called by the Topology Manager to expose local services as remote endpoints and to create local proxy services acting as clients of remote endpoints. Apache Aries RSA has an SPI (Service Provider Interface), the Distribution Provider, which makes it easy to create new transport and serialization mechanisms so that OSGi services become available outside the container. The diagram below shows the role of the Distribution Provider.

The current providers supported by Apache Aries are:

- Apache CXF Distributed OSGi

  - uses Apache CXF for transport;

  - service endpoints can also be consumed by non-Java software thanks to the support for the JAX-WS and JAX-RS standards.

- TCP

  - Java serialization over TCP (one port per service);

  - very few dependencies;

  - makes it simple to implement your own transport mechanism.

- Fastbin

  - optimized Protocol Buffers (Protobuf) serialization over TCP via NIO (Non-blocking I/O);

  - multiplexing over a single port;

  - transparent handling of InputStreams and OutputStreams in remote services;

  - synchronous and asynchronous calls supported.
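To give a feel for what "Java serialization over TCP, one port per service" involves, here is a self-contained toy: one server socket is opened for one service, the client serializes a method name and its arguments with ObjectOutputStream, and the server writes back the serialized result. This is not the wire format of the Aries RSA TCP provider; the request shape, the class name and the single-connection-per-call design are assumptions, and the sketch ignores timeouts and error propagation.

```java
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.UncheckedIOException;
import java.lang.reflect.Method;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

// Hypothetical toy of "Java serialization over TCP, one port per service".
// It is NOT the wire format of the Aries RSA TCP provider.
public class TcpServiceDemo {

    public interface WhoIamService {
        String whoiam();
    }

    // Provider side: a dedicated ServerSocket (free loopback port) for one service
    public static <S> ServerSocket export(Class<S> iface, S service) {
        final ServerSocket server = openLoopbackSocket();
        Thread acceptor = new Thread(() -> {
            while (!server.isClosed()) {
                try (Socket socket = server.accept()) {
                    ObjectOutputStream out = new ObjectOutputStream(socket.getOutputStream());
                    out.flush(); // send the stream header before blocking on the input side
                    ObjectInputStream in = new ObjectInputStream(socket.getInputStream());
                    String methodName = (String) in.readObject();
                    Object[] callArgs = (Object[]) in.readObject();
                    out.writeObject(findMethod(iface, methodName).invoke(service, callArgs));
                    out.flush();
                } catch (Exception e) {
                    // accept() throws once the socket is closed; a real provider
                    // would also report invocation failures back to the caller
                }
            }
        });
        acceptor.setDaemon(true);
        acceptor.start();
        return server;
    }

    // Consumer side: one connection per call, request = method name + arguments
    public static Object call(int port, String methodName, Object... callArgs) {
        try (Socket socket = new Socket(InetAddress.getLoopbackAddress(), port)) {
            ObjectOutputStream out = new ObjectOutputStream(socket.getOutputStream());
            out.flush();
            ObjectInputStream in = new ObjectInputStream(socket.getInputStream());
            out.writeObject(methodName);
            out.writeObject(callArgs);
            out.flush();
            return in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    private static ServerSocket openLoopbackSocket() {
        try {
            return new ServerSocket(0, 50, InetAddress.getLoopbackAddress());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Looks the method up on the (public) interface, matching by name only
    private static Method findMethod(Class<?> iface, String name) throws NoSuchMethodException {
        for (Method m : iface.getMethods()) {
            if (m.getName().equals(name)) {
                return m;
            }
        }
        throw new NoSuchMethodException(name);
    }

    public static void main(String[] args) throws IOException {
        WhoIamService impl = () -> "Who I am : {served over TCP}";
        try (ServerSocket server = export(WhoIamService.class, impl)) {
            System.out.println("Service exported on port " + server.getLocalPort());
            System.out.println(call(server.getLocalPort(), "whoiam"));
        }
    }
}
```

Both sides create their ObjectOutputStream and flush it before opening the ObjectInputStream: the input stream's constructor blocks until the peer's stream header arrives, so the opposite order would deadlock.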

Discovery uses endpoint listeners to listen for local endpoints and publish them to other OSGi containers. It also listens for remote endpoints and notifies endpoint listeners of their presence. The implementations currently supported by Apache Aries RSA are:

- Local Discovery through the use of XML descriptors;

- Discovery based on Apache ZooKeeper.

To implement our scenario (shown in Figure 4) we will use the TCP Distribution Provider and Apache ZooKeeper for Discovery. Here we go!

5. Implementation of the Remote µServices scenario

I realize it has been hard going for some of you to get here, but here we are: the very, very practical part has arrived.

From the diagram in Figure 4, some of you will already have guessed the deployment scenario: yes, Docker containers and a Raspberry Pi SBC (Single Board Computer).

5.1 Deployment environment configuration

To encourage all of you to replicate the scenario in Figure 4, there is nothing better than bringing up the necessary services with Docker Compose. Via Docker Compose we will configure the following services:

- Two Apache Karaf services (version 4.2.7)

- A Liferay Portal Community Edition service (version 7.3 GA4)

- An Apache ZooKeeper service (version 3.6.1)

The contents of the docker-compose.yml file, where the configuration details of the above services are defined, are shown below. All the images used by these services are available on the official Docker Hub accounts of their respective owners.

```yaml
# Docker Compose file for OSGi µServices demo
version: "3.3"

services:
  zookeeper-instance:
    image: zookeeper:3.6.1
    restart: always
    hostname: zookeeper-instance
    ports:
      - 2181:2181
      - 9080:8080
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "wget http://zookeeper-instance:8080/commands && echo 'OK'",
        ]
      interval: 5s
      timeout: 2s
      retries: 3

  karaf-instance-1:
    image: apache/karaf:4.2.7
    command:
      [
        "sh",
        "-c",
        "cp /opt/apache-karaf/deploy/*.cfg /opt/apache-karaf/etc/; karaf run clean",
      ]
    volumes:
      - ./karaf-instance-1/deploy:/opt/apache-karaf/deploy
    ports:
      - 8101-8105:8101
    depends_on:
      - zookeeper-instance

  karaf-instance-2:
    image: apache/karaf:4.2.7
    command:
      [
        "sh",
        "-c",
        "cp /opt/apache-karaf/deploy/*.cfg /opt/apache-karaf/etc/; karaf run clean",
      ]
    volumes:
      # each Karaf instance mounts its own deploy directory
      - ./karaf-instance-2/deploy:/opt/apache-karaf/deploy
    ports:
      - 8106-8110:8101
    depends_on:
      - zookeeper-instance

  liferay-instance-1:
    image: liferay/portal:7.3.3-ga4
    volumes:
      - ./liferay-instance-1/deploy:/etc/liferay/mount/deploy
      - ./liferay-instance-1/files:/etc/liferay/mount/files
    ports:
      - 6080:8080
      - 21311:11311
      - 9201:9201
    depends_on:
      - zookeeper-instance
```

The contents of docker-compose.yml should be clear enough. The only thing I would like to point out is the command of the Apache Karaf services, which I overrode (see CMD) for the following reasons:

- Update the Maven repository configuration, in particular switching the protocol from http to https, to avoid HTTP 501 errors when updating and/or installing new Apache Karaf features.

- Update the Apache Karaf configuration to install the Apache Aries RSA features by default, so we avoid having to do it later from the console.

- Start Apache Karaf with the clean parameter, which cleans the Karaf state.

Console 1 shows the structure of the project that brings up the services via Docker Compose. Each service has its own directory, which in turn contains further directories (deployment and configuration) and files.

The deploy directory of each Apache Karaf service contains the configuration files for Apache ZooKeeper and Apache Karaf, as well as the JAR files of the bundles implementing the Who I am service (see Table 2).

The deploy directory of the Liferay Portal service is the bundle hot-deploy directory. The JAR files of the API and Consumer bundles of the Who I am and Raspberry Pi services can optionally be placed in this directory.

The files/osgi/configs directory of the Liferay Portal service contains the configuration file for Apache ZooKeeper.

The files/osgi/modules directory of the Liferay Portal service contains all the bundles needed to install Apache Aries RSA support on Liferay's OSGi container. The same directory also contains the JARs of the API and Consumer bundles of the Who I am and Raspberry Pi services.

```
├── docker-compose.yml
├── karaf-instance-1
│   └── deploy
├── karaf-instance-2
│   └── deploy
└── liferay-instance-1
    ├── deploy
    └── files
        └── osgi
            ├── configs
            └── modules
```

5.2 Apache Aries RSA setup for OSGi containers

Before we can expose our µServices remotely, Apache Aries RSA needs to be installed on each of the OSGi containers, including Liferay.

To do this, I preferred to act directly at the Docker Compose level, so that once the services are up, everything will be ready to enjoy remote µServices in action.

Of course you can install Apache Aries RSA later, but as far as possible we always try to make the most of the tools at our disposal.

On the Apache Karaf side, installing Apache Aries RSA is really simple: the following configuration files, already included in the Docker Compose project (see the karaf-instance-1/deploy directory), are enough.

- org.apache.karaf.features.cfg. This configuration file adds the rsa-features repository and specifies which features to install during Apache Karaf start-up.

- org.apache.aries.rsa.discovery.zookeeper.cfg. This file configures the address and port of the Discovery service, which in this case is Apache ZooKeeper.

Below is part of the contents of the Apache Karaf features configuration file that concerns us. In particular, note the rsa-features entry at the end of featuresRepositories and the last three entries of featuresBoot: this is how Apache Karaf is instructed to install SCR (Service Component Runtime), aries-rsa-provider-tcp and aries-rsa-discovery-zookeeper at startup.

```properties
#
# Comma separated list of features repositories to register by default
#
featuresRepositories = \
    mvn:org.apache.karaf.features/standard/4.2.7/xml/features, \
    mvn:org.apache.karaf.features/spring/4.2.7/xml/features, \
    mvn:org.apache.karaf.features/enterprise/4.2.7/xml/features, \
    mvn:org.apache.karaf.features/framework/4.2.7/xml/features, \
    mvn:org.apache.aries.rsa/rsa-features/1.14.0/xml/features

#
# Comma separated list of features to install at startup
#
featuresBoot = \
    instance/4.2.7, \
    package/4.2.7, \
    log/4.2.7, \
    ssh/4.2.7, \
    framework/4.2.7, \
    system/4.2.7, \
    eventadmin/4.2.7, \
    feature/4.2.7, \
    shell/4.2.7, \
    management/4.2.7, \
    service/4.2.7, \
    jaas/4.2.7, \
    deployer/4.2.7, \
    diagnostic/4.2.7, \
    wrap/2.6.1, \
    bundle/4.2.7, \
    config/4.2.7, \
    kar/4.2.7, \
    scr/4.2.7, \
    aries-rsa-provider-tcp/1.14.0, \
    aries-rsa-discovery-zookeeper/1.14.0
```

The hostname and port of the Apache ZooKeeper service (see the zookeeper-instance service definition in the Docker Compose file) are set through the two properties shown below.

```properties
zookeeper.host=zookeeper-instance
zookeeper.port=2181
```

On the Liferay Portal side, installing Apache Aries RSA support is a bit more tedious. You need to download the Apache Aries RSA bundles from the Maven repository and Apache ZooKeeper from the Apache ZooKeeper site, then put them in the hot-deploy directory ($LIFERAY_HOME/deploy) for installation.

The org.apache.aries.rsa.discovery.zookeeper.cfg configuration file for the Discovery service should instead be placed in the $LIFERAY_HOME/osgi/configs directory.

Thanks to Docker Compose you won't have to do any of that: the services will start already configured. Below is the structure of the Docker Compose project, which brings up all the OSGi containers already configured and ready to use, with the OSGi bundles of the Who I am and Raspberry Pi projects installed and distributed according to the diagram in Figure 4.

```
├── docker-compose.yml
├── karaf-instance-1
│   └── deploy
│       ├── it.smc.techblog.apache.aries.rsa.examples.whoiam.api-1.0.0-SNAPSHOT.jar
│       ├── it.smc.techblog.apache.aries.rsa.examples.whoiam.service-1.0.0-SNAPSHOT.jar
│       ├── org.apache.aries.rsa.discovery.zookeeper.cfg
│       ├── org.apache.karaf.features.cfg
│       └── org.ops4j.pax.url.mvn.cfg
├── karaf-instance-2
│   └── deploy
│       ├── it.smc.techblog.apache.aries.rsa.examples.whoiam.api-1.0.0-SNAPSHOT.jar
│       ├── it.smc.techblog.apache.aries.rsa.examples.whoiam.service-1.0.0-SNAPSHOT.jar
│       ├── it.smc.techblog.apache.aries.rsa.examples.raspberrypi.api-1.0.0-SNAPSHOT.jar
│       ├── it.smc.techblog.apache.aries.rsa.examples.raspberrypi.consumer-1.0.0-SNAPSHOT.jar
│       ├── org.apache.aries.rsa.discovery.zookeeper.cfg
│       ├── org.apache.karaf.features.cfg
│       └── org.ops4j.pax.url.mvn.cfg
└── liferay-instance-1
    ├── deploy
    └── files
        └── osgi
            ├── configs
            │   └── org.apache.aries.rsa.discovery.zookeeper.cfg
            └── modules
                ├── it.smc.techblog.apache.aries.rsa.examples.raspberrypi.api-1.0.0-SNAPSHOT.jar
                ├── it.smc.techblog.apache.aries.rsa.examples.raspberrypi.consumer-1.0.0-SNAPSHOT.jar
                ├── it.smc.techblog.apache.aries.rsa.examples.whoiam.api-1.0.0-SNAPSHOT.jar
                ├── it.smc.techblog.apache.aries.rsa.examples.whoiam.consumer-1.0.0-SNAPSHOT.jar
                ├── jansi-1.18.jar
                ├── org.apache.aries.rsa.core-1.14.0.jar
                ├── org.apache.aries.rsa.discovery.command-1.14.0.jar
                ├── org.apache.aries.rsa.discovery.config-1.14.0.jar
                ├── org.apache.aries.rsa.discovery.local-1.14.0.jar
                ├── org.apache.aries.rsa.discovery.zookeeper-1.14.0.jar
                ├── org.apache.aries.rsa.eapub-1.14.0.jar
                ├── org.apache.aries.rsa.examples.echotcp.api-1.14.0.jar
                ├── org.apache.aries.rsa.examples.echotcp.consumer-1.14.0.jar
                ├── org.apache.aries.rsa.provider.tcp-1.14.0.jar
                ├── org.apache.aries.rsa.spi-1.14.0.jar
                ├── org.apache.aries.rsa.topology-manager-1.14.0.jar
                ├── org.osgi.service.remoteserviceadmin-1.1.0.jar
                └── zookeeper-3.4.14.jar
```

As for the Apache Karaf OSGi container for the Raspberry Pi service, you need a Raspberry Pi (at least a 3 Model B+) on which to install Apache Karaf, then follow the configuration steps shown previously. Installing and configuring Apache Karaf on a Raspberry Pi is really simple; I recommend reading the official guide.

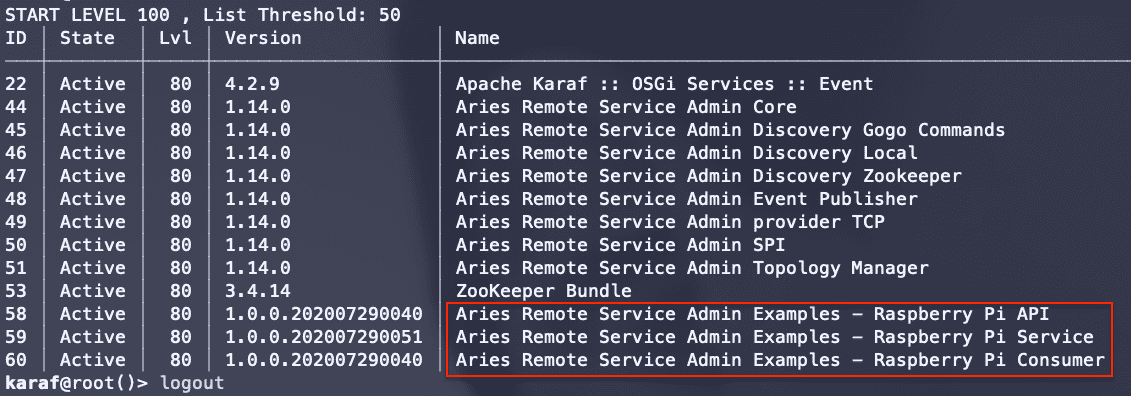

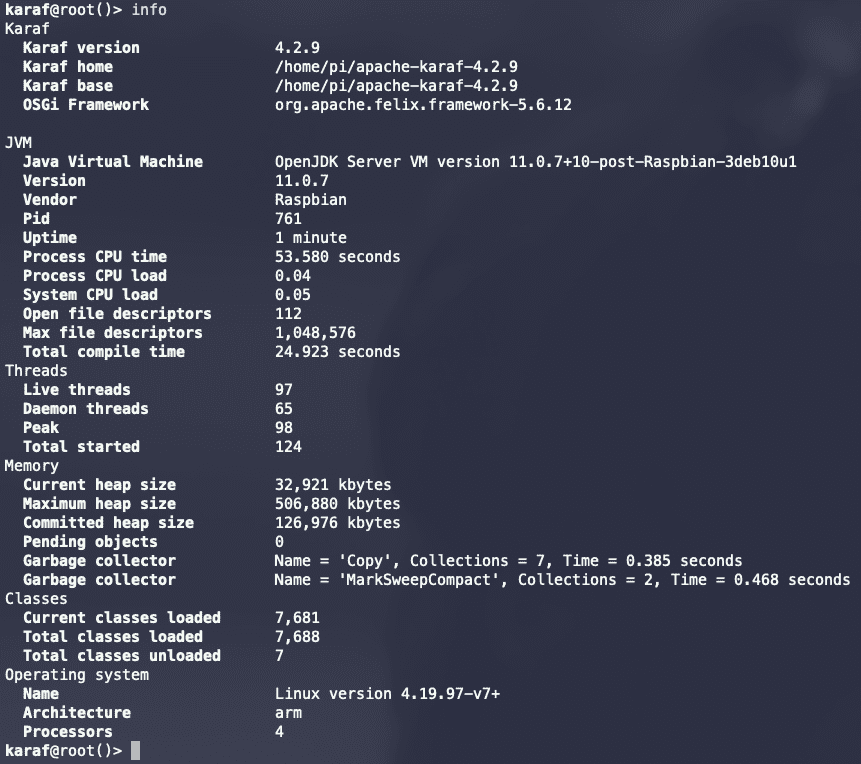

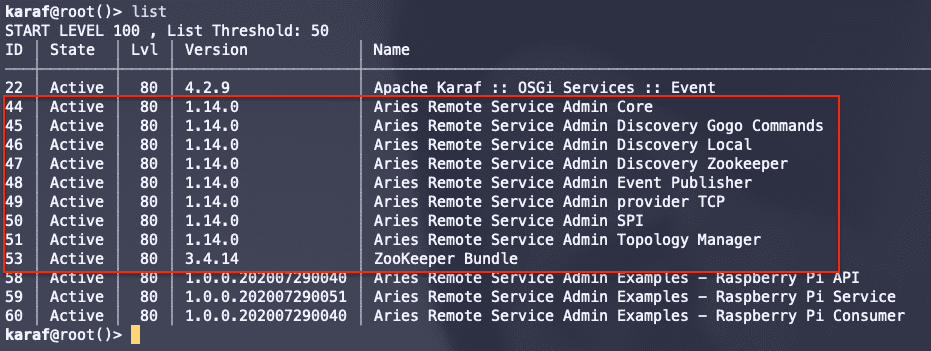

The two figures below show Apache Karaf running on my Raspberry Pi 3 Model B+ and the Apache Aries RSA and Apache ZooKeeper bundle list.

5.3 Start-up of the solution via Docker Compose

So far I think we have seen a lot of interesting things. Now it's time to start our solution via Docker Compose. The steps are few and simple (as shown in Console 3).

Before starting the services, and to avoid "strange errors", you should make sure that the resources dedicated to Docker are adequate. For these services I recommend at least 2 CPUs and 6 GB of memory.

As for Docker, the minimum required version is 18. My own environment is Docker Desktop with Engine version 19.03.12 and Compose version 1.26.2.

```shell
$ git clone https://github.com/smclab/docker-osgi-remote-services-example.git
$ cd docker-osgi-remote-services-example
$ docker-compose up
```

The first time, I recommend running Docker Compose without detached mode, so you can better follow the start-up of the whole solution. For most of you, the first start-up will take several minutes, spent downloading the images from Docker Hub.

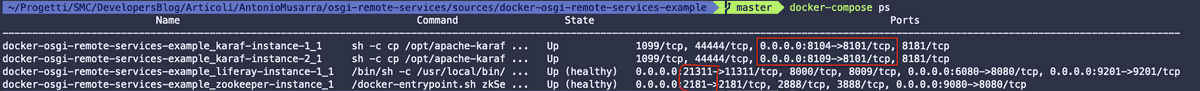

To make sure the solution came up without any problems, we can use the docker-compose ps command, which should return a result like the one shown in Figure 10 below. In this case, the output of the command shows that all the containers are up and running.

In particular, the figure below highlights the TCP administration ports of the OSGi containers, both Liferay and Apache Karaf, as well as the exported TCP port of the Apache ZooKeeper service.

The TCP port for the Liferay Gogo Shell is 21311, while for the two Apache Karaf instances the TCP ports are 8104 and 8109.

Port 2181, exported for the Apache ZooKeeper service, is the one the OSGi containers use to register information about their services.

In the Docker Compose file (see Source Code 5), the exported administration ports of the two Apache Karaf instances are specified as a range, so the port numbers are not guaranteed to stay the same; they may change every time the service starts.
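Since the Karaf administration ports may change between runs, it can be handy to check which of the expected ports actually accept connections before going further. The following is a minimal sketch in plain Java, with no OSGi dependencies; PortProbe is a hypothetical helper, and it assumes the containers publish their ports on 127.0.0.1. It only checks that a TCP connection can be opened; it does not speak the Gogo, SSH or ZooKeeper protocols.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Hypothetical helper: checks whether something accepts TCP connections on host:port. */
final class PortProbe {

    private PortProbe() {
    }

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timeout or unresolved host: treat as closed.
            return false;
        }
    }

    public static void main(String[] args) {
        // Ports taken from the text: Liferay Gogo Shell, the two Karaf
        // instances (these may differ in your run) and ZooKeeper.
        int[] ports = {21311, 8104, 8109, 2181};
        for (int port : ports) {
            System.out.printf("127.0.0.1:%d -> %s%n", port,
                isOpen("127.0.0.1", port, 500) ? "open" : "closed");
        }
    }
}
```

If a Karaf port shows up as closed, the output of docker-compose ps (Figure 10) tells you which port was actually assigned in this run.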

By clicking on Console Session 1, you can watch the terminal session that runs the commands shown in Console 3 to start Docker Compose.

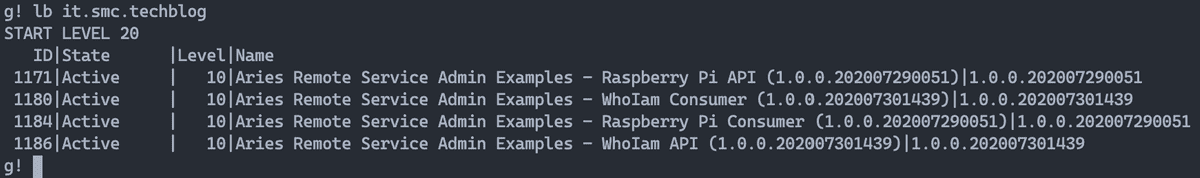

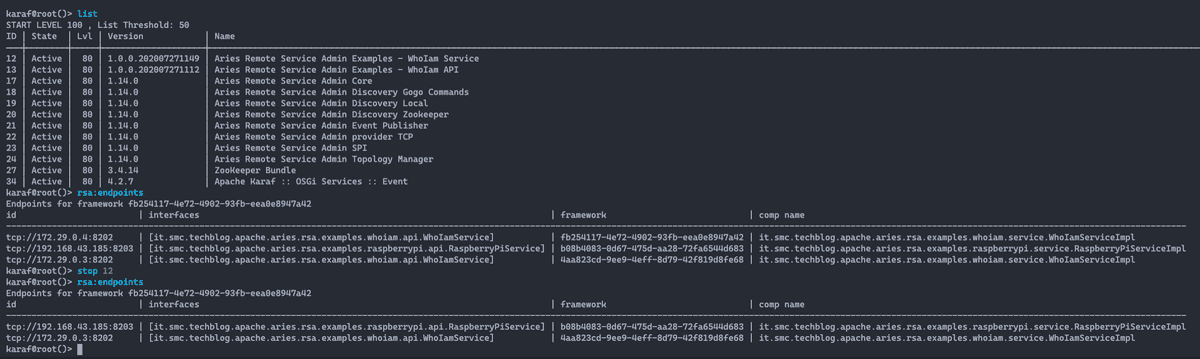

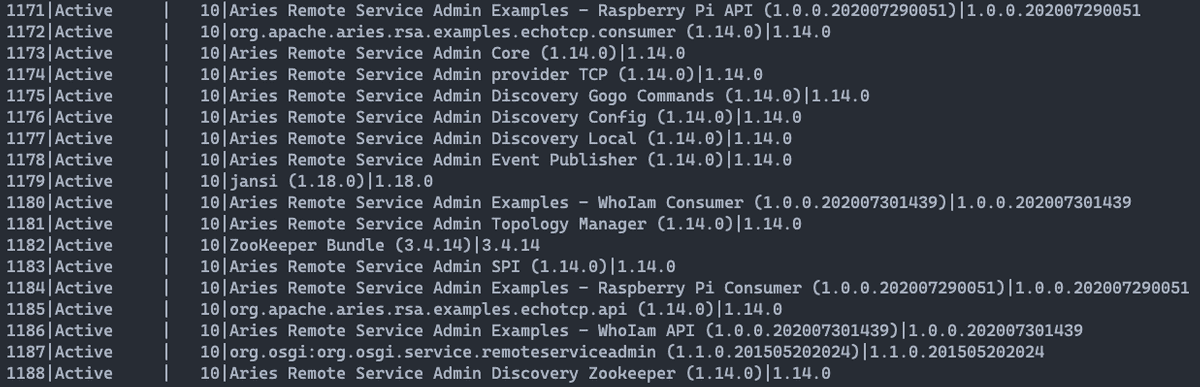

Let's do some further checks to make sure that Apache Aries RSA and Apache ZooKeeper, as well as the bundles of our services, have been installed correctly. To do this, simply connect to the Liferay Gogo Shell and use the lb command to verify that the respective bundles are present and in the Active state. The same check can be carried out on the Apache Karaf instances by connecting to their console and using the list command to verify that the respective bundles are present and also in the Active state.

The two figures below show the output of the lb and list commands for the Gogo Shell (Liferay) and Apache Karaf respectively. To connect to the Apache Karaf console, use the command ssh -p 8104 karaf@127.0.0.1, specifying the port of the instance you want to connect to (see Figure 10).

The password to access the Apache Karaf console is: karaf.

6. How to make Remote µServices

In chapter 4 we saw in detail everything that makes Remote µServices "magical": we introduced the OSGi Remote Services specification and showed how Apache Aries RSA makes it possible to export services outside the OSGi container they live in.

Does anything change for developers? Absolutely not, especially for those who use Declarative Services (DS).

Let's take a look at the Who I am service. This service is very simple: the interface it.smc.techblog.apache.aries.rsa.examples.whoiam.api.WhoIamService (see Source Code 2) defines the whoiam() method, which returns some information about the runtime environment of the service. As it stands, the implementation of this interface shown in Source Code 3 would not be a candidate for export as a remote service. To make it one, we need to add some properties to our component, as shown below.

The property service.exported.interfaces=* marks the component for export as a remote service; we must also specify the port number via the aries.rsa.port property. The latter setting is specific to the chosen transport, in this case TCP, as anticipated in chapter 4 when discussing the Distribution Provider.
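Declarative Services is not a requirement: the two settings are ordinary service properties. As a sketch, assuming a component that registers its service programmatically instead of through DS, they could be built as the Dictionary expected by BundleContext.registerService(...). RemoteExportProperties is a hypothetical helper; the port is kept as a String to mirror the aries.rsa.port=8202 entry of the @Component annotation.

```java
import java.util.Dictionary;
import java.util.Hashtable;

/** Hypothetical helper building the remote-export properties for a service registration. */
final class RemoteExportProperties {

    private RemoteExportProperties() {
    }

    static Dictionary<String, Object> forTcpPort(int port) {
        Dictionary<String, Object> props = new Hashtable<>();
        // Standard OSGi Remote Services property: export all the interfaces
        // under which the service is registered.
        props.put("service.exported.interfaces", "*");
        // Specific to the Aries RSA TCP transport: port the endpoint listens on.
        props.put("aries.rsa.port", String.valueOf(port));
        return props;
    }
}
```

With a BundleContext at hand, the registration would then look something like bundleContext.registerService(WhoIamService.class, service, RemoteExportProperties.forTcpPort(8202)), where service is your implementation instance.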

```java
package it.smc.techblog.apache.aries.rsa.examples.whoiam.service;

import it.smc.techblog.apache.aries.rsa.examples.whoiam.api.WhoIamService;

import org.osgi.framework.Bundle;
import org.osgi.framework.BundleContext;
import org.osgi.framework.Constants;
import org.osgi.service.component.annotations.Activate;
import org.osgi.service.component.annotations.Component;
import org.osgi.service.component.annotations.Reference;
import org.osgi.service.log.LogService;

import java.net.InetAddress;
import java.net.UnknownHostException;

/**
 * @author Antonio Musarra
 */
@Component(
    property = {
        // OSGi Remote Services: export every interface this component provides
        "service.exported.interfaces=*",
        // Aries RSA TCP transport: port on which the remote endpoint listens
        "aries.rsa.port=8202"
    })
public class WhoIamServiceImpl implements WhoIamService {

    @Override
    public String whoiam() {
        Bundle bundle = _bc.getBundle();

        String hostAddress = "NA";

        try {
            hostAddress = InetAddress.getLocalHost().getHostAddress();
        }
        catch (UnknownHostException uhe) {
            _log.log(LogService.LOG_ERROR, uhe.getMessage());
        }

        String response =
            "Who I am : {Bundle Id: %s, Bundle Name: %s, Bundle Version: %s, Framework Id: %s, HostAddress: %s}";

        // Bundle data identifies the provider bundle, the framework UUID
        // identifies the OSGi container, the host address identifies the machine.
        return String.format(
            response, bundle.getBundleId(),
            bundle.getHeaders().get(Constants.BUNDLE_NAME), bundle.getVersion(),
            _bc.getProperty(Constants.FRAMEWORK_UUID), hostAddress);
    }

    @Activate
    protected void activate(BundleContext bundleContext) {
        _bc = bundleContext;
    }

    private BundleContext _bc;

    @Reference
    private LogService _log;

}
```

For us developers, the "effort" ends here; there is nothing else to do. Nothing changes for the consumer either (see Source Code 4): just add the @Reference annotation to the member of type WhoIamService, as always. The Topology Manager will then take care of injecting the correct reference to the remote service, the one living inside the other OSGi container.
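For reference, here is a minimal sketch of what the WhoIamService interface (Source Code 2, not reproduced here) presumably looks like, inferred from the implementation above: a single whoiam() method returning a String. The package declaration is omitted so that the snippet compiles on its own, and StaticWhoIamService is a hypothetical stub. The point it illustrates is that the API carries nothing remoting-specific, so the same consumer code works whether the reference is satisfied by a local service or by the proxy registered by the Remote Service Admin.

```java
/** Sketch of the Who I am API, inferred from WhoIamServiceImpl (the real Source Code 2 may differ). */
interface WhoIamService {

    /** Returns a description of the runtime environment hosting the service. */
    String whoiam();
}

/** Hypothetical local stub, useful for unit testing a consumer without any OSGi container. */
final class StaticWhoIamService implements WhoIamService {

    private final String description;

    StaticWhoIamService(String description) {
        this.description = description;
    }

    @Override
    public String whoiam() {
        return description;
    }
}
```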

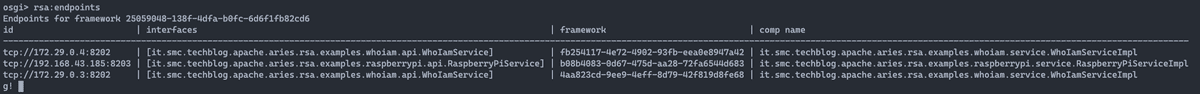

Let's connect to Liferay's Gogo Shell and see what is different from usual. Installing Apache Aries RSA gives us a command to query the Remote Service Admin and find out which endpoints have been created. The command is called rsa:endpoints, and its output in Liferay's Gogo Shell should look like the one shown below.

The output shown in the figure is what I expected, and the numbers do add up, although someone might object: there are three endpoints, so why three if we have exported only two services? It is true that there are two exported services, but the Who I am service is installed on two different OSGi containers. The command returns the following information:

- id: identifier of the exported service, expressed as a URI made up of protocol, IP address of the service and port;

- interface: the fully qualified name of the interface the service implements;

- framework: the identifier of the OSGi framework the service lives in;

- comp name: the name of the component, which corresponds to the Java class that implements the interface and therefore the service.

Note that the framework identifier is different for each endpoint, which is correct, because these services live in three different OSGi containers.
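Since the endpoint id is a URI (protocol, IP address and port), it can be taken apart with the JDK's java.net.URI when, for example, you want to log or compare endpoints programmatically. A minimal sketch, assuming ids of the form tcp://172.29.0.4:8202 as seen in this chapter; EndpointId is a hypothetical class, and any path component after the port is simply ignored.

```java
import java.net.URI;

/** Hypothetical value class: splits an endpoint id such as tcp://172.29.0.4:8202. */
final class EndpointId {

    final String protocol;
    final String host;
    final int port;

    private EndpointId(String protocol, String host, int port) {
        this.protocol = protocol;
        this.host = host;
        this.port = port;
    }

    static EndpointId parse(String id) {
        // URI.create throws IllegalArgumentException on syntactically invalid input.
        URI uri = URI.create(id);
        if (uri.getScheme() == null || uri.getHost() == null || uri.getPort() == -1) {
            throw new IllegalArgumentException("Expected protocol://host:port but got: " + id);
        }
        return new EndpointId(uri.getScheme(), uri.getHost(), uri.getPort());
    }

    @Override
    public String toString() {
        return protocol + " endpoint on " + host + ", port " + port;
    }
}
```

For instance, EndpointId.parse("tcp://172.29.0.4:8202") yields protocol tcp, host 172.29.0.4 and port 8202.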

By clicking on Console Session 2, you can see the terminal session highlighting the registration of the new endpoint for the Raspberry Pi service. The new endpoint is registered right after the Apache Karaf instance installed on the Raspberry Pi starts up.

How many times have you used the bundle (or b) command to inspect a bundle? I guess several times, especially when you needed to understand why things weren't working. Let's try running the b command on the consumer bundle of the Who I am service and see what the output shows.

Before running the bundle inspection command, let's use the command lb it.smc.techblog to get the list of our bundles and find the id of the bundle we want to inspect.

Once we have the bundle id, we run the command b 1180. The output shown in the figure contains slightly different information than usual: the Services in use section shows the reference to the remote service, from which we can see all the characteristics of the remote endpoint. This is where the Topology Manager and the Remote Service Admin come into play.

In this case the binding was made with the remote service that lives on the OSGi container with address 172.29.0.4.

What should we see when querying the OSGi framework with the command services it.smc.techblog.apache.aries.rsa.examples.whoiam.api.WhoIamService? We learn that two remote services implementing the same interface (in this case WhoIamService) are available, but only one of them is used by the consumer bundle.

What would happen if I stopped the WhoIamService service at tcp://172.29.0.4:8202, currently referenced by the consumer (bundle 1180)? Would it cause a service disruption? Absolutely not. In short, this is what happens:

- the service is stopped on the karaf-instance-2_1 instance;

- the endpoint tcp://172.29.0.4:8202 is removed from ZooKeeper;

- the Discovery service notifies the removal of the endpoint;

- the Topology Manager and the Remote Service Admin ensure that the WhoIamService reference required by the consumer is bound to the remote service of the karaf-instance-1_1 instance.
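The sequence above can be summarised with a toy model. This is not how Aries RSA or the Topology Manager are implemented; ToyTopology is a hypothetical class that only mimics what the consumer observes: it binds to the first available endpoint, rebinds only when the endpoint in use disappears, and ignores endpoints that (re)appear while it is already bound, which is roughly the behaviour of the default "reluctant" reference policy option in DS.

```java
import java.util.LinkedHashSet;
import java.util.Optional;
import java.util.Set;

/** Hypothetical toy model of endpoint failover as seen by a single consumer. */
final class ToyTopology {

    // Known endpoints, in discovery order.
    private final Set<String> endpoints = new LinkedHashSet<>();
    // Endpoint currently used by the consumer, or null if none is available.
    private String bound;

    /** Discovery reports a new endpoint: bind to it only if nothing is bound yet. */
    void endpointAdded(String endpointId) {
        endpoints.add(endpointId);
        if (bound == null) {
            bound = endpointId;
        }
    }

    /** Discovery reports a removed endpoint: rebind only if it was the one in use. */
    void endpointRemoved(String endpointId) {
        endpoints.remove(endpointId);
        if (endpointId.equals(bound)) {
            bound = endpoints.stream().findFirst().orElse(null);
        }
    }

    Optional<String> boundEndpoint() {
        return Optional.ofNullable(bound);
    }
}
```

Feeding it the events of this chapter (endpoint .4 added, then .3, then .4 removed and later re-added) leaves the consumer bound to tcp://172.29.0.3:8202, as observed on the Liferay instance.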

The figure below shows the endpoint check on the karaf-instance-2_1 instance, the stop of the Who I am Service bundle (bundle 12) and the subsequent endpoint check. After the bundle is stopped, it is evident that the endpoint tcp://172.29.0.4:8202 has been removed.

On the Liferay instance, if we check the endpoints, we will see that the endpoint tcp://172.29.0.4:8202 is gone and, running the services command again, we will see that the consumer's reference to the Who I am service has been correctly switched to the endpoint tcp://172.29.0.3:8202.

The two figures below show the changes on the Liferay side; the second one, in particular, shows the output of calling the whoiam() method of the remote service, where it is evident that the remote service that responded is the one behind the endpoint tcp://172.29.0.3:8202, that is, the karaf-instance-1_1 instance.

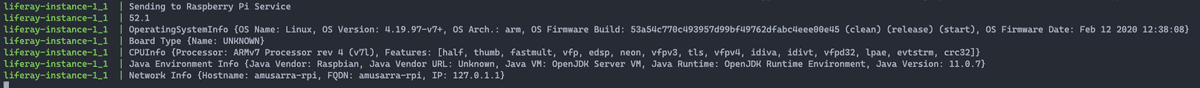

Even if we restart the previously stopped bundle, the consumer remains bound to the current endpoint. We conclude this chapter with the figure below, which shows the output of a call to the Raspberry Pi Service remote service made from the Liferay instance.

7. Projects on SMC's GitHub account

I know it took a great effort to get here, but I'll leave you a nice gift: everything you have seen in this article is available in git repositories. The project is organized into several repositories, in particular:

- docker-osgi-remote-services-example: the repository containing the whole Docker Compose project that brings up the solution represented in the diagram of Figure 4 (except for the Apache Karaf instance on the Raspberry Pi);

- aries-rsa-whoiam-examples: Maven project containing the bundles for the Who I am Service (see Table 2);

- aries-rsa-raspberrypi-examples: Maven project containing the bundles for the Raspberry Pi Service (see Table 2).

The repositories of the two OSGi projects also contain what we could call bonuses: two OSGi commands to interact with the Who I am service and with the Raspberry Pi service. Remember that OSGi commands are real commands that we can invoke from the OSGi container console.

- WhoIamConsumerCommand: OSGi command to interact with the Who I am service.

- RaspberryPiServiceConsumerCommand: OSGi command to interact with the Raspberry Pi service; for example, one of its commands returns the CPU temperature.
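To give an idea of what such a command looks like, here is a hypothetical sketch in the spirit of WhoIamConsumerCommand; the real code lives in the aries-rsa-whoiam-examples repository and may differ. Gogo commands are plain objects registered as OSGi services with the osgi.command.scope and osgi.command.function properties, and typing scope:function in the console invokes the public method of that name. To keep the snippet free of OSGi dependencies, the service reference is modelled as a Supplier<String>, and the scope name whoiam is an assumption.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

/** Hypothetical Gogo command sketch (in a real bundle the class would be public and a DS component). */
final class WhoIamCommandSketch {

    /** Service properties Gogo looks for when discovering commands. */
    static Map<String, Object> commandProperties() {
        Map<String, Object> props = new HashMap<>();
        props.put("osgi.command.scope", "whoiam");
        props.put("osgi.command.function", new String[] {"whoiam"});
        return props;
    }

    // In the real bundle this would be a @Reference to WhoIamService,
    // possibly satisfied by a remote endpoint.
    private final Supplier<String> whoIamService;

    WhoIamCommandSketch(Supplier<String> whoIamService) {
        this.whoIamService = whoIamService;
    }

    /** Invoked by Gogo when the user types whoiam:whoiam in the console. */
    public String whoiam() {
        return whoIamService.get();
    }
}
```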

Each repository contains a README with basic usage instructions.

8. Conclusions

Given the topics covered, this article is a follow-up to the talk I gave at Liferay Boot Camp Summer Edition 2020, where I specifically discussed the Liferay platform in the context of Microservices.

Although I have tried to be concise, with this article I wanted to demonstrate how the OSGi framework, with its real µServices, can foster the development of lightweight, reliable and clean distributed systems.

I realize that the subject would deserve more space, but within the space I had, I hope I have explained things clearly and aroused your curiosity.

I think I can say that it is hard to find articles on the net with this level of detail that walk through the development of a practical solution and make all the material available.

If you've made it this far, I guess you have also collected a number of questions, which I invite you to leave as comments on the article. More in-depth articles will be published soon.

9. Resources

Below is a series of resources that served as the reference point for the contents of this article.