This is the first in a series of articles covering that part of machine learning known as Natural Language Processing (NLP).

This article refers to several key concepts in Machine Learning. For the main definitions, read the article Machine learning and applications for industry.

Natural Language Processing is the interdisciplinary research field spanning computer science, artificial intelligence and linguistics. Its purpose is to develop algorithms capable of analyzing, representing and therefore "understanding" natural language, written or spoken, as well as human beings do or even more efficiently.

Huge amounts of text and speech content are generated and stored nowadays. Very often this data goes unused, even though it is an invaluable resource from which tools and applications with considerable added value can be built.

By exploiting the available textual and vocal content it is possible, for example, to create tools for:

- Named Entity Recognition: recognize and extract entities and semantic information from the text.

- Text Classification: classify textual content, for example by the sentiment of a text (positive or negative).

- Entity linking: disambiguate the entities identified in the text (link the textual entities to identifying concepts).

- Topic Modeling: automatically extract the main topics present in a textual corpus.

- Autocompletion: complete a partially typed input, for example a search query.

- Machine translation: translate textual content from one language to another.

- Speech Recognition: transform voice content into textual content (a technology behind chatbots).

In this first article we will cover the task known as Named Entity Recognition (NER). We will see how to create a tool capable of recognizing entities in text and what some of its possible applications are.

Named Entity Recognition

Named Entity Recognition belongs to the class of NLP tasks known as Information Extraction. With NER it is possible to identify entities in a text and associate them with the corresponding semantic categories, such as persons, organizations, geopolitical entities, geographic locations, numbers, temporal expressions and so on.

The task has developed strongly in recent years, especially thanks to the advent of Deep Learning. The use of very deep neural networks has greatly improved the effectiveness of entity recognition tools. Before Deep Learning, the most widely used tool was the Hidden Markov Model, a statistical model based on Markov chains, which did not reach the performance of today's Deep Learning-based models.

Two examples of training models for entity recognition are shown later in the article, both based on neural networks.

Annotation techniques and tools

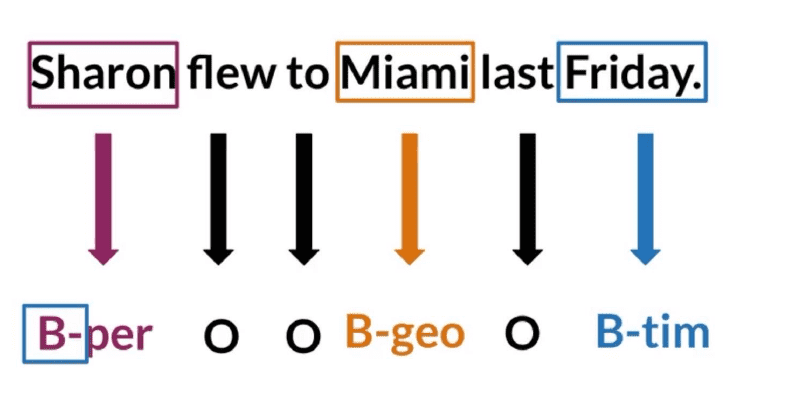

Named Entity Recognition is addressed with supervised approaches, so a set of labeled data is needed to train a model to recognize entities. Each text must be labeled with its list of tokens and, for each entity to be recognized, the related tags.

There are several formats for representing a training dataset for Named Entity Recognition. Let's see the most used ones.

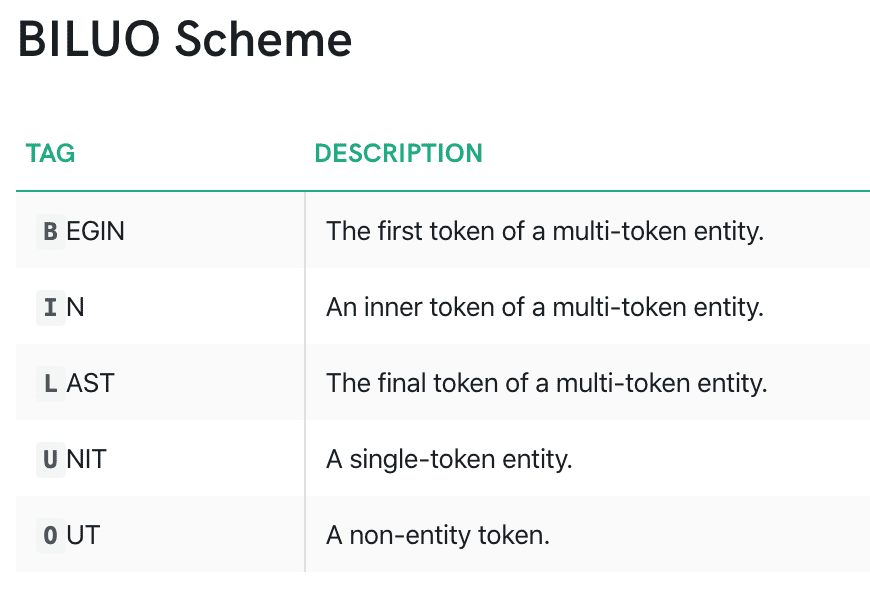

The first format is the BILUO scheme, where the different parts of each entity are mapped according to the scheme in Figure 2. Each token of an entity is tagged with the semantic category preceded by one of the defined prefixes.

The mapping between tokens and tags is then saved in a csv file, with a separate label for all tokens that do not fall within the semantic categories of interest.

The BILUO scheme is perhaps the most popular format.
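As a rough illustration of the prefixes, the sketch below tags a tokenized sentence given token-level entity spans, using the usual reading of BILUO (Begin, In, Last, Unit, Out). The function name `biluo_tags` and the `(start, end, label)` span convention, with token indices and an exclusive end, are assumptions made for this example and do not come from any library.

```python
def biluo_tags(n_tokens, spans):
    """Return one BILUO tag per token.

    spans: list of (start, end, label) in token indices, end exclusive
    (a convention assumed for this sketch).
    """
    tags = ["O"] * n_tokens  # O = token outside any entity
    for start, end, label in spans:
        if end - start == 1:
            tags[start] = "U-" + label  # U = single-token (unit) entity
        else:
            tags[start] = "B-" + label  # B = first token of the entity
            for i in range(start + 1, end - 1):
                tags[i] = "I-" + label  # I = inner token
            tags[end - 1] = "L-" + label  # L = last token of the entity
    return tags


tokens = ["Daniele", "works", "in", "Rome", "for", "SMC", "Treviso"]
spans = [(0, 1, "PERSON"), (3, 4, "LOC"), (5, 7, "ORG")]
for token, tag in zip(tokens, biluo_tags(len(tokens), spans)):
    print(token, tag)
```

Each printed pair corresponds to one row of the csv file described above.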

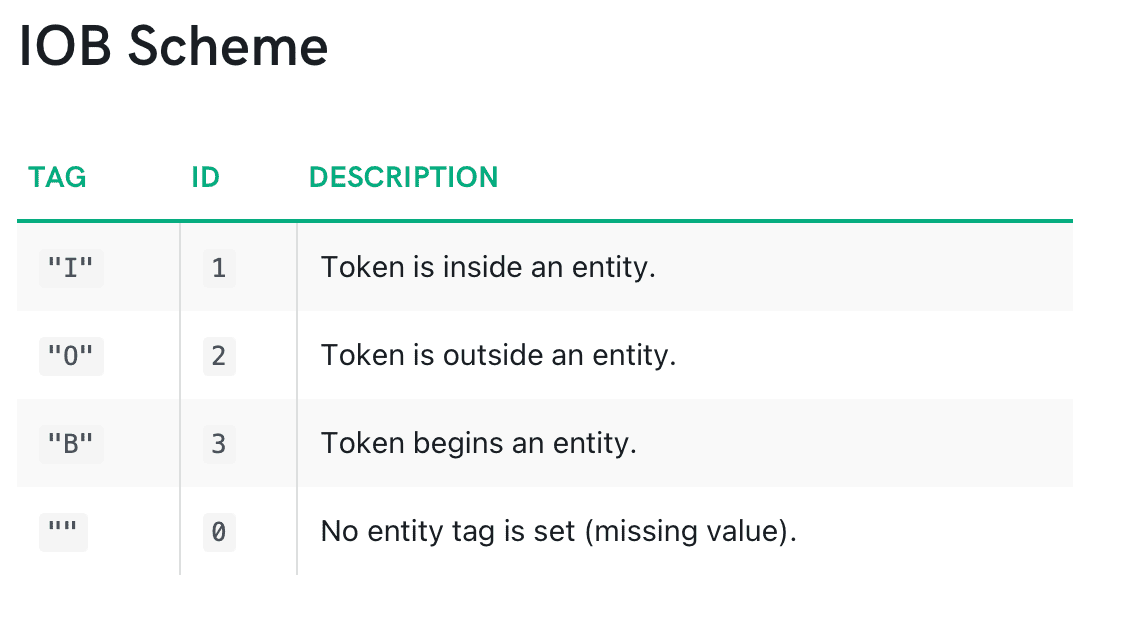

A second format, the IOB scheme, is a simplified version of the BILUO scheme. It is less fine-grained: the prefix associated with the entity indicates only whether a token is at the beginning of or inside a multi-word entity.

The scheme follows the specifications defined in the figure below.

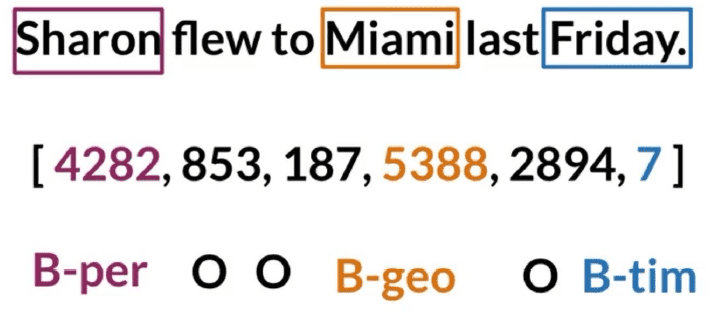

A third representation is in jsonl format. In this format, each textual content is associated with a list that indicates, for each entity, the position in the text and the associated semantic category.

```json
{"text": "Sharon flew to Miami last friday", "entities": [[0, 6, "PERSON"], [15, 20, "LOC"], [26, 32, "DATE"]]}
```

Offsets are character positions with an exclusive end, so the first entity covers characters 0 to 5, i.e. "Sharon".

Experts identify the BILUO scheme as the best choice for making Named Entity Recognition models as accurate as possible. This is because the multi-prefix scheme encodes more detailed information about the text, which improves the learning capabilities of the Machine Learning algorithm used.

Even with the IOB and jsonl formats, however, it is very often possible to achieve noteworthy performance.

Switching between formats is relatively simple and can be done with straightforward rule-based procedures. In addition, many NLP libraries already provide functions to transform a dataset into the desired format.
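One such rule-based procedure, sketched here under the assumption that tags are strings of the form `PREFIX-LABEL` (or plain `O`), converts BILUO tags to IOB tags: `U-` becomes `B-` and `L-` becomes `I-`. The helper name `biluo_to_iob_tags` is hypothetical, chosen for this example only.

```python
def biluo_to_iob_tags(tags):
    """Map BILUO tags to IOB tags: U- becomes B-, L- becomes I-."""
    prefix_map = {"U": "B", "L": "I"}
    converted = []
    for tag in tags:
        if tag == "O":
            converted.append(tag)  # tokens outside entities are unchanged
            continue
        prefix, label = tag.split("-", 1)
        converted.append(prefix_map.get(prefix, prefix) + "-" + label)
    return converted


print(biluo_to_iob_tags(["U-PERSON", "O", "B-ORG", "I-ORG", "L-ORG"]))
```

The opposite direction needs one extra step, because deciding between `I-` and `L-` (or `B-` and `U-`) requires looking at the following tag.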

To label data and transform it into one of these formats, there are dedicated tools called annotators. An annotator lets you upload your data as plain text and then label it in a graphical environment, which makes the annotation process more agile. Annotators let you label data for NER, but also for text classification and sequence-to-sequence tasks.

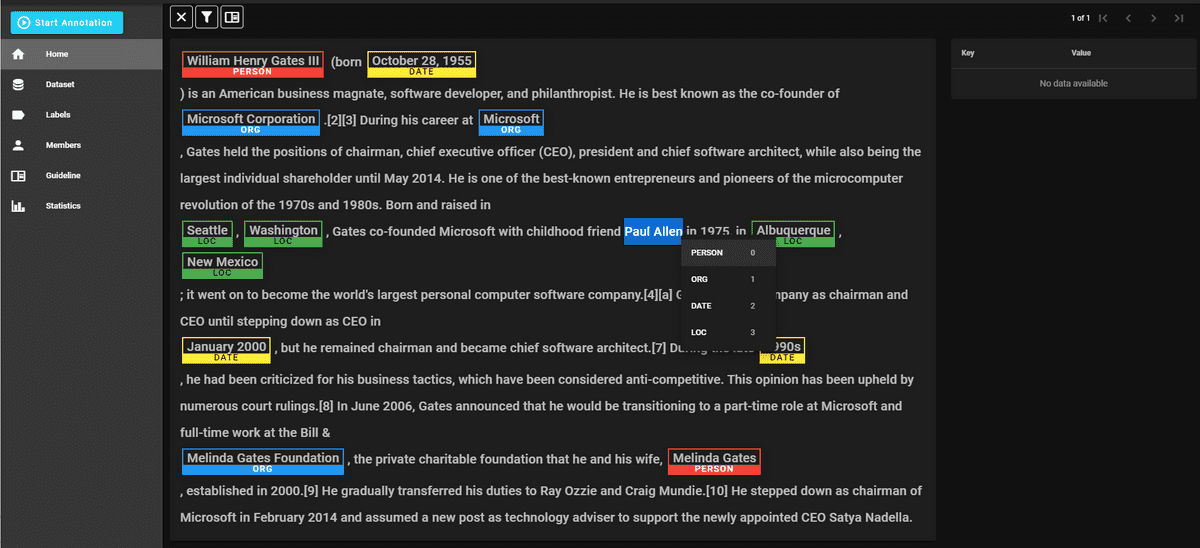

In the case of NER, an annotator presents an interface like the one shown in the figure, where annotation is carried out simply by highlighting the text and specifying the label to assign.

The example screen is from doccano, a well-structured, open source annotator. Once the data is labeled, doccano lets you export the dataset in jsonl format. Among the paid tools, [Prodigy](https://prodi.gy/) is worth mentioning: an advanced annotator enhanced with active learning, developed by [Explosion.ai](https://explosion.ai/), the creators of the open source NLP library [Spacy](https://spacy.io/).
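An exported jsonl file can be read with a few lines of Python. The sketch below is only illustrative: it assumes each record uses the keys `text` and `entities`, as in the example shown earlier, whereas a real export may use different field names. It also treats end offsets as exclusive, and `load_jsonl` is a hypothetical helper name.

```python
import json


def load_jsonl(lines):
    """Parse JSON Lines records into (text, {"entities": [...]}) tuples."""
    examples = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        entities = [(start, end, label) for start, end, label in record["entities"]]
        examples.append((record["text"], {"entities": entities}))
    return examples


# One hand-written record; in practice the lines would come from open(path).
sample = [
    '{"text": "Sharon flew to Miami last friday", '
    '"entities": [[0, 6, "PERSON"], [15, 20, "LOC"], [26, 32, "DATE"]]}'
]
for text, annotations in load_jsonl(sample):
    for start, end, label in annotations["entities"]:
        # Slicing with the offsets shows exactly what each annotation covers.
        print(label, repr(text[start:end]))
```

Printing the slice for every annotation is a cheap way to catch offsets that are shifted by one character before training on them.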

Dataset

If you want to train models for entity recognition, one option is to first look at some of the publicly available labeled datasets. These tend to be generic datasets, with entities labeled with semantic categories such as personal names, organizations, locations, dates and temporal entities; however, it is also possible to find labeled datasets for more specific domains. These datasets are almost always in English, but this problem can very often be overcome by applying machine translation before Named Entity Recognition, translating the content from Italian to English and vice versa.

Here is a list of some of the tagged datasets available online:

- Annotated Corpus for Named Entity Recognition: dataset in IOB scheme containing text labeled with geographical, geopolitical, temporal entities, etc.

- CoNLL 2003: dataset in IOB scheme containing news articles annotated with LOC (locations), ORG (organizations), PER (persons) and MISC (miscellaneous).

- Enron Email Dataset: more than 500,000 emails tagged with names, dates and time entities.

- OntoNotes 5: a dataset of news, telephone conversations and blog content tagged with entities of different kinds.
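Datasets distributed in a CoNLL-style layout store one token per line, with whitespace-separated columns and a blank line between sentences. The sketch below reads such lines into sentences of `(token, tag)` pairs. It assumes the token is the first column and the entity tag the last, and it skips `-DOCSTART-` marker lines. The sample is made up for illustration and is not taken from any of the datasets listed above.

```python
def read_conll(lines):
    """Group CoNLL-style lines into sentences of (token, tag) pairs."""
    sentences, current = [], []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("-DOCSTART-"):
            # a blank line or document marker closes the current sentence
            if current:
                sentences.append(current)
                current = []
            continue
        columns = line.split()
        current.append((columns[0], columns[-1]))  # first column: token, last: tag
    if current:
        sentences.append(current)
    return sentences


# Made-up sample in a four-column layout (token, POS, chunk, entity tag).
sample = """-DOCSTART- -X- -X- O

Sharon NNP B-NP B-PER
flew VBD B-VP O
to TO B-PP O
Miami NNP B-NP B-LOC

Daniele NNP B-NP B-PER
works VBZ B-VP O
""".splitlines()

for sentence in read_conll(sample):
    print(sentence)
```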

Overview of tools for Named Entity Recognition

Many tools and libraries are available today for building and deploying Natural Language Processing applications. They provide both pre-trained models that are easy to use and quick to integrate into your code, and the possibility of training new ones on your own data, using predefined modules that simplify the training of new Machine Learning tools.

In the next section we will see how to train custom models on your own data.

For now, let's see some easy-to-use tools that provide pre-trained models for recognizing entities in text.

Spacy

[Spacy](https://spacy.io/) is a Natural Language Processing framework for developing and deploying Machine Learning techniques for many of the most popular NLP tasks. Spacy, a completely open source tool, also provides a list of pre-trained models, with support for different languages, which can be used to perform natural language processing tasks such as entity recognition on textual content.

```shell
# install spaCy
pip install -U spacy

# download the model
python -m spacy download en_core_web_sm
```

```python
# import
import spacy

# load the chosen model
nlp = spacy.load("en_core_web_sm")

# run the model on a text and print the recognized entities
doc = nlp("Apple is looking at buying U.K. startup for $1 billion")
for ent in doc.ents:
    print(ent.text, ent.start_char, ent.end_char, ent.label_)
```

These two snippets show how to install the library in your Python environment with pip and download the chosen model, here the small English model, since the example text is in English. Then, with two simple lines of code, you can load the model and run it on a text.

Stanford NLP

Stanford NLP is a research group at Stanford University dedicated to NLP, which has created a suite of tools for various NLP tasks, including Named Entity Recognition.

```python
# import
import nltk
from nltk.tag.stanford import StanfordNERTagger

# text to process
sentence = "Sharon flew to Miami last friday"

# paths to the Stanford NER jar and to the trained model
jar = './stanford-ner-tagger/stanford-ner.jar'
model = './stanford-ner-tagger/ner-model-english.ser.gz'

# prepare the NER tagger with the chosen model and jar
ner_tagger = StanfordNERTagger(model, jar, encoding='utf8')

# tokenization: split the text into words
words = nltk.word_tokenize(sentence)

# run the NER tagger on the words
print(ner_tagger.tag(words))
```

In this snippet, the nltk library is used to tokenize the text into a list of words. This list is then fed to the NER tagger, which returns a tag for each word.
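Since the tagger returns one `(word, tag)` pair per token, multi-word entities have to be reassembled afterwards. A minimal sketch, assuming the tags carry no `B-`/`I-` prefixes and that `O` marks tokens outside any entity, is to merge consecutive tokens that share a tag. The input below is hand-written in that shape and is not actual tagger output; `group_entities` is a hypothetical helper.

```python
from itertools import groupby


def group_entities(tagged_tokens, outside_tag="O"):
    """Merge consecutive tokens that share a tag into (text, tag) entities."""
    entities = []
    for tag, group in groupby(tagged_tokens, key=lambda pair: pair[1]):
        if tag == outside_tag:
            continue  # skip runs of tokens that are not entities
        entities.append((" ".join(word for word, _ in group), tag))
    return entities


# Hand-written input in the (word, tag) shape, for illustration only.
tagged = [
    ("Daniele", "PERSON"), ("works", "O"), ("in", "O"), ("Rome", "LOCATION"),
    ("for", "O"), ("SMC", "ORGANIZATION"), ("Treviso", "ORGANIZATION"),
]
print(group_entities(tagged))
```

One limitation of this simple rule is that two distinct entities of the same type that appear side by side are merged into one.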

Training of custom models

We have seen that tools and pre-trained models are already available and how they can be used to identify entities in your own text.

In many cases, however, they turn out to be insufficient, either because we want to identify entities belonging to different semantic categories, or because the pre-trained models are not suited to the data source on which entity recognition is to be performed.

In these cases it is more convenient to train your own models for Named Entity Recognition, using your own data tagged with the help of annotators, as seen earlier.

Here are two examples of training custom models, using the Spacy library and the Deep Learning library Tensorflow.

Visit the Github repository containing the source code of the examples, described and executable in well-documented Jupyter notebooks.

Spacy

Spacy, as already mentioned, is a Machine Learning library entirely dedicated to developing tools for the different tasks of Natural Language Processing. It is a well-structured framework with advanced features, which make it well suited to production contexts.

In addition to pre-trained, ready-to-use tools, as seen in the previous section, it provides a whole series of APIs and functions for creating and training your own models quickly and efficiently, without having to manage the lower-level aspects that usually must be addressed when training a Machine Learning model.

Spacy provides predefined, configurable pipelines for many NLP tasks, including Named Entity Recognition. Let's see how to quickly train a model for this task with a few lines of code, without having to deal with the definition of the model architecture, data handling or model management.

In this first code snippet:

- the necessary libraries are imported

- a sample of the training data is defined with the same offset-based structure as the jsonl format; in a real context it would instead be read from a file containing the training data

- a new model is initialized, or an existing model is loaded as the starting point for training

- the "ner" pipeline component is added to the model, if it is not already present

```python
# NOTE: this example uses the spaCy 2.x training API
from __future__ import unicode_literals, print_function

import random
import warnings

import spacy
from spacy.util import minibatch, compounding

# training data: (text, annotations) pairs; entity offsets are character
# positions with an exclusive end, so text[0:6] == "Sharon"
TRAIN_DATA = [
    ("Sharon flew to Miami last friday", {"entities": [(0, 6, "PERSON"), (15, 20, "LOC"), (26, 32, "DATE")]}),
    ("Daniele works in Rome for SMC Treviso", {"entities": [(0, 7, "PERSON"), (17, 21, "LOC"), (26, 37, "ORG")]}),
]

model = None       # name or path of an existing model, or None to start from a blank one
output_dir = None  # directory where the trained model is saved, or None to skip saving
n_iter = 100       # number of training iterations

# Load the model, set up the pipeline and train the entity recognizer.
if model is not None:
    nlp = spacy.load(model)  # load existing spaCy model
    print("Loaded model '%s'" % model)
else:
    nlp = spacy.blank("en")  # create blank Language class
    print("Created blank 'en' model")

# create the built-in pipeline components and add them to the pipeline
# nlp.create_pipe works for built-ins that are registered with spaCy
if "ner" not in nlp.pipe_names:
    ner = nlp.create_pipe("ner")
    nlp.add_pipe(ner, last=True)
# otherwise, get it so we can add labels
else:
    ner = nlp.get_pipe("ner")

# add labels
for _, annotations in TRAIN_DATA:
    for ent in annotations.get("entities"):
        ner.add_label(ent[2])
```

The actual training logic is implemented in the following code. The pipeline components are first filtered, disabling those not needed to train an entity recognition model. Then, for the specified number of iterations, the code iterates over the data, divided into minibatches, and updates the model parameters iteration after iteration. Dropout can be configured as needed.

The example prints the model loss at the end of each training iteration.

```python
# get names of other pipes to disable them during training
pipe_exceptions = ["ner", "trf_wordpiecer", "trf_tok2vec"]
other_pipes = [pipe for pipe in nlp.pipe_names if pipe not in pipe_exceptions]

# only train NER
with nlp.disable_pipes(*other_pipes), warnings.catch_warnings():
    # show warnings for misaligned entity spans once
    warnings.filterwarnings("once", category=UserWarning, module='spacy')

    # reset and initialize the weights randomly, but only if we're
    # training a new model
    if model is None:
        nlp.begin_training()

    for itn in range(n_iter):
        random.shuffle(TRAIN_DATA)
        losses = {}
        # batch up the examples using spaCy's minibatch
        batches = minibatch(TRAIN_DATA, size=compounding(4.0, 32.0, 1.001))
        for batch in batches:
            texts, annotations = zip(*batch)
            nlp.update(
                texts,  # batch of texts
                annotations,  # batch of annotations
                drop=0.5,  # dropout - make it harder to memorise data
                losses=losses,
            )
        print("Losses", losses)
```

Finally, to complete the training process, the model can easily be saved to disk and, just as easily, loaded for use in real contexts by specifying the path where it was saved.

```python
from pathlib import Path

# save model to output directory
if output_dir is not None:
    output_dir = Path(output_dir)
    if not output_dir.exists():
        output_dir.mkdir()
    nlp.to_disk(output_dir)
    print("Saved model to", output_dir)

    # test the saved model
    print("Loading from", output_dir)
    nlp2 = spacy.load(output_dir)
    for text, _ in TRAIN_DATA:
        doc = nlp2(text)
        print("Entities", [(ent.text, ent.label_) for ent in doc.ents])
        print("Tokens", [(t.text, t.ent_type_, t.ent_iob) for t in doc])
```

In this example the model architecture is not defined explicitly, and neither the vocabulary underlying the model nor the process of storing and saving the model has to be managed by hand.

It is all handled by Spacy, which defines the entire Named Entity Recognition logic in the reference pipeline.

This also allows less experienced users to train a model for this task.

In any case, looking more closely at the implementation of the pipeline, we find that the architecture used is a convolutional network with residual connections. These networks, similarly to what happens with pyramidal cells in our cerebral cortex, add shortcut connections that skip one or more layers and link different parts of the network; for some NLP tasks they perform very effectively and outperform other Deep Learning algorithms.

For further information and more details, there is excellent documentation on the Spacy website, regarding both the training procedure and the architecture used.

The configuration used can in many cases immediately lead to excellent performance; it is nevertheless always recommended to optimize the parameters in order to identify the best configuration. For advice on how to perform this optimization, visit https://spacy.io/usage/training#tips.
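Comparing configurations requires a metric. A common choice for NER is entity-level precision, recall and F1, where a prediction counts as correct only if its offsets and label both match a gold annotation. The sketch below is a minimal, library-independent version of that idea. The function name `span_prf` and the example spans are assumptions for illustration, not Spacy's own evaluation code.

```python
def span_prf(gold, predicted):
    """Entity-level precision, recall and F1 over exact (start, end, label) matches."""
    gold_set, pred_set = set(gold), set(predicted)
    true_positives = len(gold_set & pred_set)
    precision = true_positives / len(pred_set) if pred_set else 0.0
    recall = true_positives / len(gold_set) if gold_set else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Gold annotations and hypothetical model output for one sentence.
gold = [(0, 6, "PERSON"), (15, 20, "LOC"), (26, 32, "DATE")]
predicted = [(0, 6, "PERSON"), (15, 20, "ORG")]

precision, recall, f1 = span_prf(gold, predicted)
print(round(precision, 3), round(recall, 3), round(f1, 3))
```

In practice the counts would be accumulated over a held-out set rather than a single sentence, so that the score reflects how the model behaves on data it has not been trained on.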

Tensorflow

Tensorflow is one of the most widely used Deep Learning libraries, both for experimentation and in production. It provides a multitude of tools and techniques commonly used to build Machine Learning models based on very deep neural networks. It is maintained by Google and is completely open source.

Tensorflow makes it possible to build any Deep Learning model. Networks can be designed either with advanced techniques that allow the lower-level aspects to be coded directly, or with predefined layers, composing a neural network with the architecture that best suits your needs and configuring the layers and training logic in search of the best performance.

In Spacy we are in fact forced to use the architecture chosen by the developers: we can act on some of its configuration parameters, but we cannot define the training algorithm in detail. The architecture provided by Spacy can in many cases prove to be the best, but it may also be that, for a certain data source and for the nature of the entities to be recognized, there is a better algorithm than the convolutional networks chosen in Spacy.

With Tensorflow, as mentioned, any Deep Learning algorithm and model can be created. So let's see how to define a model for Named Entity Recognition using Tensorflow Keras and bidirectional recurrent neural networks of type [LSTM](https://en.wikipedia.org/wiki/Long_short-term_memory).

Recurrent neural networks are well suited to tasks where the relationships between elements of a sequence matter. For this reason they are widely used for NLP tasks, such as entity recognition, where relationships within the text are very important.

```python
# import
import pandas as pd
import numpy as np
import pickle

import tensorflow as tf
from tensorflow.keras.preprocessing.sequence import pad_sequences
from tensorflow.keras.utils import to_categorical
from tensorflow.keras import Model, Input
from tensorflow.keras.layers import LSTM, Embedding, Dense
from tensorflow.keras.layers import TimeDistributed, SpatialDropout1D, Bidirectional
from tensorflow.keras.callbacks import EarlyStopping

from sklearn.model_selection import train_test_split
```

Because the logic has to be defined at a lower level, data management must also be done differently, handling aspects that in Spacy are managed by the library itself.

The following snippet reads the training data, in BILUO format, into a pandas dataframe. Duplicate-free lists of the tokens and tags present in the dataset are then created. Finally, following the logic defined by the SentenceGetter class, the dataset is split into sentences, and vocabularies that map the word and tag lists to numeric identifiers are created.

```python
# class to split the dataset into sentences
class SentenceGetter(object):

    def __init__(self, data):
        self.n_sent = 1
        self.data = data
        agg_func = lambda s: [(w, t) for w, t in zip(s["Word"].values.tolist(), s["Tag"].values.tolist())]
        self.grouped = self.data.groupby("Sentence #").apply(agg_func)
        self.sentences = [s for s in self.grouped]

# read the csv into a pandas dataframe
data = pd.read_csv("data/biluo_train_data.csv", encoding='latin1')
# forward-fill missing values; the result must be assigned back,
# because the dataframe is not modified in place
data = data.ffill()
print(data.head(20))

print("Unique words in corpus:", data['Word'].nunique())
print("Unique tags in corpus:", data['Tag'].nunique())

# list of words: index 0 is reserved for unknown words and the last index for padding
# ("UNK" is a placeholder name introduced here; "ENDPAD" is the padding token)
words = ["UNK"] + list(set(data["Word"].values)) + ["ENDPAD"]
num_words = len(words)

# list of tags
tags = list(set(data["Tag"].values))
num_tags = len(tags)
print(num_words, num_tags)

# split the dataset into sentences
getter = SentenceGetter(data)
sentences = getter.sentences

# dictionaries mapping words and tags to integers; with this ordering
# word2idx["UNK"] == 0 and word2idx["ENDPAD"] == num_words - 1, which matches
# the unknown-word index and the padding value used in the later snippets
word2idx = {w: i for i, w in enumerate(words)}
tag2idx = {t: i for i, t in enumerate(tags)}
```

At this point the vocabularies are used to map each text to a sequence of integers, which can then be given as input to the neural network during training.

Once this is done, the data and the associated tags are split into a training set and a test set, the latter used to evaluate the trained model.

```python
# maximum sentence length
max_len = 400

# map the words of every sentence to integer ids with word2idx and pad every sequence to the same length
X = [[word2idx[w[0]] for w in s] for s in sentences]
X = pad_sequences(maxlen=max_len, sequences=X, padding='post', value=num_words-1)

# map the tags of every sentence to integer ids with tag2idx and pad every sequence to the same length
y = [[tag2idx[w[1]] for w in s] for s in sentences]
y = pad_sequences(maxlen=max_len, sequences=y, padding='post', value=tag2idx["O"])
y = [to_categorical(i, num_classes=num_tags) for i in y]

# split the dataset into training and test sets
x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.1, random_state=1)
```
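To make the preprocessing concrete, the sketch below reproduces the two transformations in plain Python on a toy sentence: right-padding a sequence of ids to a fixed length and one-hot encoding the tag ids. It is an illustration of the idea, not the Keras implementation. The helpers `pad_post` and `one_hot` and the toy vocabularies are invented for this example, and `pad_post` cuts over-long inputs at the end, whereas `pad_sequences` by default removes values from the start.

```python
def pad_post(sequence, max_len, pad_value):
    """Right-pad a list of ids to max_len; longer inputs are cut at the end."""
    clipped = list(sequence[:max_len])
    return clipped + [pad_value] * (max_len - len(clipped))


def one_hot(index, num_classes):
    """Return a list of zeros with a single 1 at position index."""
    vector = [0] * num_classes
    vector[index] = 1
    return vector


# Toy vocabularies, invented for this example.
toy_word2idx = {"Sharon": 1, "flew": 2, "to": 3, "Miami": 4, "ENDPAD": 5}
toy_tag2idx = {"O": 0, "B-per": 1, "B-geo": 2}

toy_sentence = [("Sharon", "B-per"), ("flew", "O"), ("to", "O"), ("Miami", "B-geo")]
x = pad_post([toy_word2idx[w] for w, _ in toy_sentence], max_len=6, pad_value=toy_word2idx["ENDPAD"])
y = pad_post([toy_tag2idx[t] for _, t in toy_sentence], max_len=6, pad_value=toy_tag2idx["O"])
y_one_hot = [one_hot(i, len(toy_tag2idx)) for i in y]

print(x)          # word ids followed by padding ids
print(y_one_hot)  # one vector of length num_tags per position
```

Padding positions receive the `O` tag, so the network also learns to predict "outside" for them.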

At this point the architecture of the model is defined. It is a network with an initial Embedding layer, a bidirectional LSTM layer and finally a TimeDistributed layer, which applies the same fully connected layer at every time step. For further information on the different layers, please refer to the official documentation.

The model is then trained for a number of epochs, using EarlyStopping as a callback that monitors the training and stops it if the network begins to overfit, i.e. to adapt excessively to the training data, with a consequent loss of accuracy on the test data.
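The stopping rule described above can be sketched in plain Python. This is a simplified illustration of patience-based early stopping rather than the Keras implementation. The function name `early_stopping_epoch` and the loss values are made up for the example.

```python
def early_stopping_epoch(val_losses, patience, min_delta=0.0):
    """Return the index of the epoch at which training would stop, or None.

    Training stops once the validation loss has failed to improve by more
    than min_delta for `patience` consecutive epochs.
    """
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss   # new best value: reset the counter
            waited = 0
        else:
            waited += 1   # no improvement in this epoch
            if waited >= patience:
                return epoch
    return None


# Made-up validation losses: improvement stalls after the third epoch.
losses = [0.90, 0.70, 0.60, 0.62, 0.61, 0.65]
print(early_stopping_epoch(losses, patience=3))
```

A larger `patience` tolerates longer plateaus before stopping, at the cost of extra training epochs.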

```python
input_word = Input(shape=(max_len,))
model = Embedding(input_dim=num_words, output_dim=max_len, input_length=max_len)(input_word)
model = SpatialDropout1D(0.1)(model)
model = Bidirectional(LSTM(units=100, return_sequences=True, recurrent_dropout=0.1))(model)
out = TimeDistributed(Dense(num_tags, activation='softmax'))(model)
model = Model(input_word, out)
model.summary()

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

early_stopping = EarlyStopping(monitor='val_loss', min_delta=0, patience=10, verbose=0, mode='auto', baseline=None,
                               restore_best_weights=False)
callbacks = [early_stopping]

history = model.fit(
    x_train, np.array(y_train),
    validation_split=0.2,
    batch_size=32,
    epochs=30,
    callbacks=callbacks,  # without this argument EarlyStopping is never applied
    verbose=1)
```

Finally the model is saved to disk, together with every component needed for future use: not only the model itself, but also all the vocabularies required for the data transformations.

```python
import os

# make sure the output directory exists before writing to it
os.makedirs('models/bilstm', exist_ok=True)

with open('models/bilstm/word2idx.pickle', 'wb') as handle:
    pickle.dump(word2idx, handle, protocol=pickle.HIGHEST_PROTOCOL)

with open('models/bilstm/tag2idx.pickle', 'wb') as handle:
    pickle.dump(tag2idx, handle, protocol=pickle.HIGHEST_PROTOCOL)

with open('models/bilstm/words.pickle', 'wb') as handle:
    pickle.dump(words, handle, protocol=pickle.HIGHEST_PROTOCOL)

with open('models/bilstm/tags.pickle', 'wb') as handle:
    pickle.dump(tags, handle, protocol=pickle.HIGHEST_PROTOCOL)

# save the full model (architecture and weights)
model.save('models/bilstm/bilstm.h5')
```

To reuse the model, just load it from the path where it was stored, together with the dictionaries. We also define a function to tokenize text using nltk.

```python
import pickle

import nltk
from tensorflow.keras.models import load_model

# custom tokenizer
def tokenize(s):
    return nltk.word_tokenize(s)

sentence = "Sharon flew to Miami last friday"
max_len = 400

# load the dictionaries (same paths as in the saving step)
with open('models/bilstm/word2idx.pickle', 'rb') as handle:
    word2idx = pickle.load(handle)

with open('models/bilstm/tag2idx.pickle', 'rb') as handle:
    tag2idx = pickle.load(handle)

with open('models/bilstm/words.pickle', 'rb') as handle:
    words = pickle.load(handle)

with open('models/bilstm/tags.pickle', 'rb') as handle:
    tags = pickle.load(handle)

# load the model
model = load_model('models/bilstm/bilstm.h5')
```

At this point we tokenize the text, pad it to the specified maximum length and feed it to the model, which returns the tag and the associated probability for each token.

```python
# tokenization
test_sentence = tokenize(sentence)

# preprocessing: map words to ids (0 for unknown words) and pad to max_len
x_test_sent = pad_sequences(sequences=[[word2idx.get(w, 0) for w in test_sentence]],
                            padding="post", value=len(words)-1, maxlen=max_len)

# predict tags and probabilities
p_pred = model.predict(np.array([x_test_sent[0]]))
p = np.argmax(p_pred, axis=-1)

# collect (word, tag, probability) for each token
prediction = []
for w, pred, probs in zip(test_sentence, p[0], p_pred[0]):
    prediction.append((w, tags[pred], probs[pred]))
```

As mentioned, using Tensorflow, or another Deep Learning library, to train models for this task is generally more complex and requires more knowledge of the fundamentals of Machine Learning.

In many cases tools like Spacy allow you to reach very high levels of performance; if they do not, tools like Tensorflow can be used to search for the neural network and configuration that best suit your case.
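The `prediction` list built above holds one tag per token, so a last step is usually needed to turn token-level tags into whole entities. The sketch below does this for IOB-style tags (`B-`, `I-`, `O`). It is a hedged example: the helper name `iob_to_entities` and the sample tags are assumptions, and BILUO tags would need `L-` and `U-` handled as well.

```python
def iob_to_entities(tokens, tags):
    """Collect (entity_text, label) pairs from parallel token and IOB tag lists."""
    entities, current_tokens, current_label = [], [], None
    for token, tag in zip(tokens, tags):
        prefix, _, label = tag.partition("-")
        if prefix == "B" or (prefix == "I" and label != current_label):
            # start of a new entity (also recovers from an I- tag without a B-)
            if current_tokens:
                entities.append((" ".join(current_tokens), current_label))
            current_tokens, current_label = [token], label
        elif prefix == "I":
            current_tokens.append(token)  # continuation of the open entity
        else:
            # an O tag closes any open entity
            if current_tokens:
                entities.append((" ".join(current_tokens), current_label))
            current_tokens, current_label = [], None
    if current_tokens:
        entities.append((" ".join(current_tokens), current_label))
    return entities


# Hand-written tags for illustration, not actual model output.
sample_tokens = ["Daniele", "works", "in", "Rome", "for", "SMC", "Treviso"]
sample_tags = ["B-per", "O", "O", "B-geo", "O", "B-org", "I-org"]
print(iob_to_entities(sample_tokens, sample_tags))
```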

Applications of NER

The ability to identify entities in text intelligently and effectively allows us to tag textual content with the concepts that are relevant to it. This makes it possible to create or improve a range of applications.

🔍 Search systems

With NER it is possible to improve both the efficiency and the effectiveness of search systems.

Efficiency improves because searches can be run directly on the list of tags or entities associated with each piece of content, instead of doing a full-text search.

Effectiveness improves because searches can target specific types of entities. For example, to search for documents that refer to a particular person or organization, NER can recognize these types of entities and map them to specific fields, so that the search filters on the entity category and the specified value.

Another example is tagging past emails with people and email addresses, also specifying their context.

An application of this kind can be very important in an enterprise context, where the goal is to search quickly and effectively through the content of a company, an employee or a customer.
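As a minimal sketch of the idea, the code below builds an index from `(category, value)` pairs to the documents that mention them, so that a search becomes a dictionary lookup instead of a full-text scan. The document ids and entities are invented for the example, and a production system would more likely store these fields in a search engine.

```python
from collections import defaultdict


def build_entity_index(documents):
    """Map (label, lowercased entity text) to the set of ids of documents mentioning it."""
    index = defaultdict(set)
    for doc_id, entities in documents.items():
        for text, label in entities:
            index[(label, text.lower())].add(doc_id)
    return index


# Entities as they might be extracted by an NER model (invented for this example).
documents = {
    "doc-1": [("Sharon", "PERSON"), ("Miami", "LOC")],
    "doc-2": [("Daniele", "PERSON"), ("Rome", "LOC"), ("SMC Treviso", "ORG")],
    "doc-3": [("Miami", "LOC")],
}

index = build_entity_index(documents)
print(sorted(index[("LOC", "miami")]))       # documents that mention the location Miami
print(sorted(index[("ORG", "smc treviso")]))  # documents that mention the organization
```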

☎️ Customer Service

Company customer care teams have to navigate hundreds or thousands of support messages per day. Being able to tag incoming messages and tickets can greatly speed up routing each ticket quickly and correctly to the department specialized in that type of problem.

👀 Recommendation systems

In recommendation systems it is essential to analyze what a user explores. In a textual recommendation system, such as a news recommendation tool, the ability to recognize entities in the text makes it possible to analyze the content the user visits and then recommend similar content.
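One simple way to make "similar" concrete, shown here only as a sketch, is to compare the sets of entities found in each article with the Jaccard similarity (size of the intersection divided by size of the union). The article titles, entity sets and the `recommend` helper are invented for this example; real systems typically combine such signals with many others.

```python
def jaccard(a, b):
    """Jaccard similarity between two collections of entities."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(read_entities, candidates, top_n=2):
    """Return up to top_n candidate titles that share entities with the read content."""
    scored = [(jaccard(read_entities, entities), title) for title, entities in candidates.items()]
    scored.sort(key=lambda item: (-item[0], item[1]))  # best score first, ties by title
    return [title for score, title in scored[:top_n] if score > 0]


# Entities extracted from what the user has read, and from candidate articles.
read_entities = {"Miami", "Sharon"}
candidates = {
    "Flights to Miami": {"Miami", "Florida"},
    "Rome travel guide": {"Rome"},
    "Sharon in Miami": {"Sharon", "Miami", "Friday"},
}
print(recommend(read_entities, candidates))
```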