This article is part of a series dedicated to the most relevant Natural Language Processing tasks. You can explore the others starting from the first article.

As further reading, I recommend an introduction to the basic aspects of Machine Learning, specifically Machine learning and applications for industry.

What is Topic Modeling

Topic Modeling is a specific NLP (Natural Language Processing) task that automatically identifies the main topics covered in a corpus of documents.

It is a task that follows an unsupervised approach, and as such it does not need a labeled dataset.

This makes Topic Modeling easy to set up and apply.

Each identified topic is described through a series of relevant tokens and/or phrases, ordered by importance, which describe its nature.

In this context, each document is described by one or more topics. It is assumed that each document is represented by a statistical distribution over the topic space, which can be obtained by "summing" the distributions of all the topics it covers.
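To make the idea of "summing" distributions more concrete, here is a minimal sketch in plain Python. The two topics, their word probabilities, and the document's topic weights are made-up illustrative values, not output of the model trained later in this article, and `mix_topics` is a hypothetical helper.

```python
# Hypothetical topic-word distributions (each sums to 1).
topics = {
    "crime": {"police": 0.5, "arrested": 0.3, "video": 0.2},
    "tech": {"apple": 0.6, "iphone": 0.3, "video": 0.1},
}

# Hypothetical topic mixture for one document (weights sum to 1).
doc_topic_weights = {"crime": 0.7, "tech": 0.3}


def mix_topics(topics, weights):
    """Return the document's word distribution as a weighted sum of topics."""
    doc_words = {}
    for topic_name, weight in weights.items():
        for word, prob in topics[topic_name].items():
            doc_words[word] = doc_words.get(word, 0.0) + weight * prob
    return doc_words


doc_word_dist = mix_topics(topics, doc_topic_weights)
for word, prob in sorted(doc_word_dist.items(), key=lambda item: -item[1]):
    print(f"{word}: {prob:.2f}")
```

A word such as "video" that appears in both topics receives a contribution from each of them, weighted by how much the document talks about that topic.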

The two main methods for implementing Topic Modeling approaches are:

Let's see how to implement Topic Modeling approaches. We will proceed as follows:

- Reading and preprocessing the textual content with the NLTK library

- Building a Topic Model with the Latent Dirichlet Allocation technique, using the Gensim library

- Interactive display of the result with the pyLDAvis library

In the GitHub repository linked to the NLP article series there is a Jupyter Notebook describing the whole process.

For this example, a news headline dataset is used, downloadable from Kaggle in json format.

In the repository, for reasons of space, there is only a small subset (in csv format) of the entire dataset, with news from specific categories.

To use the entire dataset, download it and use the utility present in the repository to transform it into csv format.
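The repository's utility is not reproduced here. As a rough idea of what such a conversion involves, the sketch below assumes that the json file holds one JSON object per line and that each object has `category` and `headline` fields; both the file layout and the `json_lines_to_csv` helper are assumptions for illustration.

```python
import csv
import io
import json


def json_lines_to_csv(src, dst, fields=("category", "headline")):
    """Copy the chosen fields of each JSON line in src to CSV rows in dst."""
    writer = csv.DictWriter(dst, fieldnames=list(fields))
    writer.writeheader()
    for line in src:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        writer.writerow({field: record.get(field, "") for field in fields})


# Tiny in-memory demo; with real files, pass open(...) handles instead.
sample = io.StringIO(
    '{"category": "TECH", "headline": "New phone announced", "date": "2018-01-01"}\n'
    '{"category": "CRIME", "headline": "Suspect arrested"}\n'
)
out = io.StringIO()
json_lines_to_csv(sample, out)
print(out.getvalue())
```

Working on file-like objects keeps the function easy to try on small samples before running it on the full download.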

Preprocessing and transformation of contents

A phase of preprocessing and transformation of the contents is initially carried out, to put the dataset in the best shape for training the model.

In Topic Modeling it is good practice to carry out the following transformation steps.

1. Tokenization

Each document must be transformed into a token list. To do this we define a specific function, using the Tokenizer of the NLTK library.

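    import nltk

    # Tokenizer models; newer NLTK releases may also require nltk.download('punkt_tab')
    nltk.download('punkt')

    def tokenize(text):
        # Split raw text into word tokens
        tokens = nltk.word_tokenize(text)
        return tokens

2. Elimination of stopwords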

In a task like Topic Modeling, stopwords must be eliminated, as they carry no relevant information for identifying and describing topics.

We use the stopwords defined by the NLTK library and define a function that tells whether a token is a stopword.

    nltk.download('stopwords')

    # Set of English stopwords for fast membership tests
    en_stop = set(nltk.corpus.stopwords.words('english'))

    def is_stopwords(token):
        # True if the token is an English stopword
        return token in en_stop

3. Lemmatization

It is very useful to reduce each token of each document to its base form (lemma). Otherwise we would have syntactically different tokens that express the same semantic concept.

To carry out this process we define a function that returns the lemma of a word, using the WordNetLemmatizer provided by NLTK.

    nltk.download('wordnet')

    from nltk.stem.wordnet import WordNetLemmatizer

    # Create the lemmatizer once and reuse it for every token
    lemmatizer = WordNetLemmatizer()

    def get_lemma(token):
        return lemmatizer.lemmatize(token)

Let's put it all together in a single function that will be used to transform each item in the dataset.

In addition to the above operations, we convert each token to lowercase and discard those of length less than or equal to 3.

    def transform(text):
        tokens = tokenize(text)
        tokens = [token.lower() for token in tokens]
        # Drop short tokens (3 characters or fewer)
        tokens = [token for token in tokens if len(token) > 3]
        tokens = [token for token in tokens if not is_stopwords(token)]
        tokens = [get_lemma(token) for token in tokens]
        return tokens

At this point we can read the contents of our csv file, extract the headlines into a list, and transform each one into the desired form.

    import pandas as pd

    data = pd.read_csv("../data/data.csv")
    text_list = data["headline"].tolist()
    cleaned_summary_list = [transform(element) for element in text_list]

LDA with Gensim

Dictionary and Vector Corpus

To build our Topic Model we use the LDA implementation provided by the Gensim library.

As a first step we build a vocabulary (dictionary) from our transformed data. We then convert the data into a bag-of-words corpus and derive a Tf-Idf vector model from it.

We save the dictionary and corpus for future use.
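To illustrate what the bag-of-words and Tf-Idf representations contain, here is a simplified sketch in plain Python. It is not Gensim's implementation: the helper names are hypothetical, and the weighting (raw count times log2 of the inverse document frequency, with no normalization) is an assumption chosen for brevity.

```python
import math
from collections import Counter

# Toy tokenized documents (illustrative only).
docs = [
    ["police", "arrested", "suspect"],
    ["apple", "iphone", "rumor"],
    ["police", "video", "suspect", "suspect"],
]

# Vocabulary: map each distinct token to an integer id.
token2id = {}
for doc in docs:
    for token in doc:
        token2id.setdefault(token, len(token2id))


def to_bow(doc):
    """Bag of words: sorted (token_id, count) pairs for known tokens."""
    counts = Counter(token2id[t] for t in doc if t in token2id)
    return sorted(counts.items())


bow_corpus = [to_bow(doc) for doc in docs]

# Document frequency: in how many documents each token id appears.
doc_freq = Counter(token_id for bow in bow_corpus for token_id, _ in bow)


def to_tfidf(bow):
    """Weight each count by log2(N / document frequency)."""
    n_docs = len(bow_corpus)
    return [(tid, count * math.log2(n_docs / doc_freq[tid])) for tid, count in bow]


print(bow_corpus[2])
print(to_tfidf(bow_corpus[2]))
```

Tokens that occur in few documents get a larger weight than tokens spread across the whole corpus, which is the intuition behind the Tf-Idf step.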

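    import pickle
    from gensim import corpora, models

    # Map each token to an integer id, then encode documents as bags of words
    dictionary = corpora.Dictionary(cleaned_summary_list)
    corpus = [dictionary.doc2bow(text) for text in cleaned_summary_list]

    tfidf = models.TfidfModel(corpus)
    corpus_tfidf = tfidf[corpus]

    # Persist the corpus and dictionary for later use
    with open('../models/corpus.pkl', 'wb') as f:
        pickle.dump(corpus_tfidf, f)
    dictionary.save('../models/dictionary.gensim')

Model training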

Once our corpus has been transformed, we move on to training the Topic Model.

Among the various parameters that can be specified when applying the LDA technique, the following are the most relevant:

- corpus: Vector corpus of documents to be used for training

- num_topics : Number of topics to extract

- id2word : Dictionary that defines the mapping of ids to words

- passes : Number of steps through the entire corpus during training

There are other parameters that we do not specify for simplicity and for which we use the default values.

For all information about the LDA model, see the document Latent Dirichlet Allocation.

At this point we move on to train our model.

    import gensim

    num_topics = 5
    ldamodel = gensim.models.ldamodel.LdaModel(corpus, num_topics=num_topics, id2word=dictionary, passes=15)
    ldamodel.save('model5.gensim')

Once training is finished, we save the model and print, for each identified topic, a representation given by the most relevant keywords belonging to it.

    # Ask for 10 keywords per topic, matching the output shown below
    topics = ldamodel.print_topics(num_words=10)
    for topic in topics:
        print(topic)

    (0, '0.016*"shooting" + 0.012*"police" + 0.009*"killed" + 0.006*"prison" + 0.006*"photo" + 0.005*"suspect" + 0.005*"school" + 0.005*"arrested" + 0.005*"killing" + 0.005*"olympics"')
    (1, '0.023*"apple" + 0.013*"space" + 0.012*"iphone" + 0.011*"photo" + 0.009*"video" + 0.008*"planet" + 0.007*"rumor" + 0.007*"science" + 0.006*"first" + 0.006*"earth"')
    (2, '0.009*"found" + 0.007*"allegedly" + 0.007*"police" + 0.007*"woman" + 0.006*"study" + 0.006*"video" + 0.005*"player" + 0.004*"arrested" + 0.004*"football" + 0.004*"google"')
    (3, '0.010*"facebook" + 0.010*"state" + 0.008*"video" + 0.006*"people" + 0.006*"world" + 0.006*"google" + 0.006*"final" + 0.005*"tournament" + 0.005*"player" + 0.004*"score"')
    (4, '0.053*"video" + 0.015*"watch" + 0.010*"youtube" + 0.007*"show" + 0.005*"photo" + 0.005*"tiger" + 0.005*"world" + 0.005*"touchdown" + 0.004*"study" + 0.004*"lebron"')

Using the trained model

We have now trained a Topic Model and saved the result along with the corpus and the dictionary. This way we can reuse the model in our code to extract the topics covered in a new document.

We pass a test headline to the model and obtain as a result a distribution over the topic space.

    new_headline = 'Two person murdered in New York'
    new_headline = transform(new_headline)
    new_headline_bow = dictionary.doc2bow(new_headline)
    print(ldamodel.get_document_topics(new_headline_bow))

    [(0, 0.3996785),
     (1, 0.06758379),
     (2, 0.39854106),
     (3, 0.06670547),
     (4, 0.06749118)]

At this point, let's extract the topic with the highest score. In this case it is the topic with id 0. We then retrieve its representation through the main keywords.
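Selecting the highest-scoring topic can be sketched in plain Python as follows. The list is copied from the output above; the variable names are only illustrative.

```python
# Topic distribution copied from the output above: (topic_id, probability).
doc_topics = [
    (0, 0.3996785),
    (1, 0.06758379),
    (2, 0.39854106),
    (3, 0.06670547),
    (4, 0.06749118),
]

# Pick the pair with the largest probability.
best_topic_id, best_score = max(doc_topics, key=lambda pair: pair[1])
print(best_topic_id, best_score)
```

Note that topics 0 and 2 are almost tied for this headline, so in practice it can make sense to keep every topic above a chosen threshold rather than only the single best one.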

    # Ask for 10 keywords, matching the output shown below
    ldamodel.print_topic(0, topn=10)

    '0.016*"shooting" + 0.012*"police" + 0.009*"killed" + 0.006*"prison" + 0.006*"photo" + 0.005*"suspect" + 0.005*"school" + 0.005*"arrested" + 0.005*"killing" + 0.005*"olympics"'

Model update

In a real context, we expect new content to become available over time, carrying useful information that can improve the performance of the model.

We may choose to retrain the model from scratch, using the full corpus extended with the new content.

The Gensim library, however, also lets us retrain the model partially, which is faster.

After loading the most recent model from file, we can build a corpus from the new documents and update the model, which we can then save and use instead of the previous version.

    import pandas as pd
    from gensim import corpora, models

    temp_file = "../models/model.h5"

    # Load a potentially pretrained model from disk.
    lda = models.LdaModel.load(temp_file)

    new_data = pd.read_csv("../data/new_data.csv")
    new_text_list = new_data["headline"].tolist()
    new_cleaned_summary_list = [transform(element) for element in new_text_list]

    # Reuse the dictionary saved at training time so token ids match the model
    dictionary = corpora.Dictionary.load('../models/dictionary.gensim')
    new_corpus = [dictionary.doc2bow(text) for text in new_cleaned_summary_list]

    tfidf = models.TfidfModel(new_corpus)  # fit Tf-Idf weights on the new documents
    corpus_tfidf = tfidf[new_corpus]

    # Update the existing model with the new documents only
    lda.update(corpus_tfidf)

Visualization with pyLDAvis

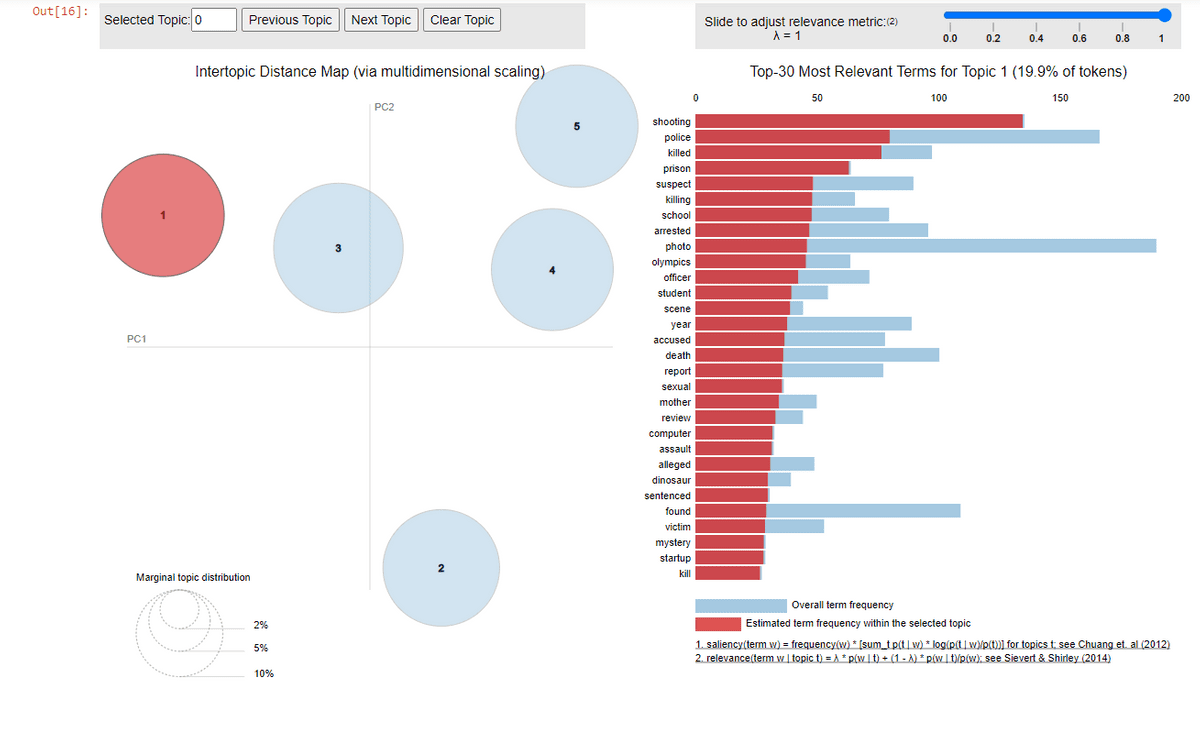

pyLDAvis lets you explore, in an interactive environment (a Jupyter Notebook, for example), the topics identified by the Topic Model you created. We load the newly trained model, together with the vector representation of the corpus and the dictionary, and ask pyLDAvis to display the results.

    import pickle
    import gensim
    import pyLDAvis
    import pyLDAvis.gensim_models as gensimvis

    pyLDAvis.enable_notebook()

    dictionary = gensim.corpora.Dictionary.load('dictionary.gensim')
    with open('corpus.pkl', 'rb') as f:
        corpus = pickle.load(f)
    lda = gensim.models.ldamodel.LdaModel.load('model5.gensim')

    lda_display = gensimvis.prepare(lda, corpus, dictionary, sort_topics=False)
    pyLDAvis.display(lda_display)

Through the display method of the library, we generate an interactive panel that shows, on the left, a representation of the topics in the topic space and, on the right, the main concepts and keywords for the selected topic.

The topics that are close to each other or overlap will be similar topics.
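To give an intuition of what "close" means here, the sketch below computes the Jensen-Shannon divergence between topic-word distributions, a common way to measure how different two topics are. As far as I know, pyLDAvis by default derives the distances in the left panel from this kind of divergence before projecting them to two dimensions, but treat that as an assumption; the three distributions are made-up values.

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence between two aligned probability lists."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence: symmetric, 0 for identical distributions."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


# Hypothetical topic-word distributions over the same 4-word vocabulary.
crime_a = [0.50, 0.30, 0.15, 0.05]
crime_b = [0.45, 0.35, 0.10, 0.10]
sports = [0.05, 0.05, 0.30, 0.60]

print(js_divergence(crime_a, crime_b))  # small: similar topics
print(js_divergence(crime_a, sports))   # larger: dissimilar topics
```

Two topics that put their probability mass on the same words have a small divergence and therefore end up near each other in the panel.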

It is possible to interact with the panel through appropriate commands that allow you to:

- Choose which and how many topics to display

- Set the appropriate Saliency, that is the measure of how much the term tells you about that topic

- Set the appropriate Relevance, that is a measure of the importance of the word for that topic, compared to the rest of the topics.
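The relevance setting can be illustrated with a small sketch. It assumes the definition commonly associated with LDAvis-style panels, relevance = λ · log p(w|t) + (1 − λ) · log(p(w|t) / p(w)), where λ is the value chosen in the panel; the probabilities below are made-up values and `relevance` is a hypothetical helper.

```python
import math


def relevance(p_word_given_topic, p_word, lam=0.5):
    """Relevance of a word for a topic, for a weight lam between 0 and 1."""
    return lam * math.log(p_word_given_topic) + (1 - lam) * math.log(
        p_word_given_topic / p_word
    )


# Hypothetical probabilities: (p(word | topic), p(word) in the whole corpus).
words = {
    "video": (0.050, 0.040),      # frequent in the topic, but frequent everywhere
    "touchdown": (0.020, 0.002),  # rarer, but almost exclusive to the topic
}

for lam in (1.0, 0.0):
    ranked = sorted(words, key=lambda w: relevance(*words[w], lam=lam), reverse=True)
    print(lam, ranked)
```

With λ close to 1 the ranking favors words that are simply frequent in the topic, while with λ close to 0 it favors words that are specific to the topic compared with the rest of the corpus.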

Conclusions

We have seen how Topic Modeling is a simple technique to apply, since it does not need labeled data. All you need is a corpus of documents you can access, on which you can train your model.

We have also seen how simple it is to retrain a model with newly acquired data. Very often this can be done through quick, frequent refinements of the model that consider only the new data, retraining from scratch only at wider intervals.

As we have seen, given a document, this technique returns the topics it covers, and for each of these you can get the main keywords that describe it.
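As a small illustration of turning that keyword representation into usable tags, the sketch below parses a topic string in the format shown earlier in this article. The string is a shortened copy of topic 0, and `parse_topic` is a hypothetical helper, not part of Gensim.

```python
import re

# Topic string in the format shown earlier in this article (topic 0, shortened).
topic_repr = '0.016*"shooting" + 0.012*"police" + 0.009*"killed" + 0.006*"prison"'


def parse_topic(topic_repr):
    """Turn 'weight*"word" + ...' into a list of (word, weight) pairs."""
    pairs = re.findall(r'([0-9.]+)\*"([^"]+)"', topic_repr)
    return [(word, float(weight)) for weight, word in pairs]


keywords = parse_topic(topic_repr)
tags = [word for word, _ in keywords]
print(tags)
```

The resulting list of words can then be attached to a document as tags, keeping the weights when a ranking is needed.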

In this way we automatically obtain information on the nature of the analyzed text. All this can be very useful for applications such as:

- cognitive search engines: the contents are tagged with the keywords of the main topics, which can then be exploited in the search phase

- customer service: it is possible to identify the main topics discussed by customers and understand their needs more easily and quickly

- social networks: to identify the main topics covered by users

- sentiment analysis: to identify concepts that are an expression of the sentiment of the content