Search is a task of fundamental importance in many work and non-work contexts; the main goal of a search system is to satisfy the user's needs as precisely and efficiently as possible, identifying the most relevant results for each request.

With this objective in mind, search systems have evolved considerably in recent years.

Today, the state of the art is represented by cognitive search engines.

Below we will see what a cognitive search system is, and then describe and explore how the cognitive search solution Openk9 works.

What is cognitive search

Organizations nowadays have to manage content from multiple data sources, for example:

- Mail server

- Web portals

- CRM

- etc.

This content is also very often of different types: documents, emails, contacts, calendars, tickets, and much more.

Last but not least, this content can be either structured or unstructured.

A first requirement for a modern search system is to centralize search over content of different origins, types, and natures in a single system, so that all of it can be searched through a single access point and in a uniform way.

But that's not all. Computer systems as a whole are increasingly shaped and extended by modern Artificial Intelligence and Machine Learning techniques, and search systems are no exception. Cognitive search systems are born from this union.

To define a cognitive search system, we can say that:

a cognitive search system is an advanced search system that uses ML capabilities to enrich content and support the user during search, with the primary goal of increasing the accuracy of the results.

AI and ML capabilities therefore play a fundamental role in these systems. In particular, as the definition states, they serve two very specific purposes:

- enriching the content indexed by the cognitive search system

- supporting users during search in achieving their intent

Later in this article we illustrate how the Openk9 solution works, which will make it clearer how data enrichment is carried out and managed.

For now, however, it is worth giving an overview of some useful and frequently used techniques for these two purposes.

Machine Learning techniques for enrichment

What does data enrichment mean?

Data enrichment refers to the analysis and expansion of the content of interest: when content is indexed by the cognitive search engine, it is analyzed to extract additional, "hidden" information that can make searches more precise.

Once extracted, this information is linked to the original data, and the enriched data is indexed. The information produced by enrichment can then be used to run more powerful and precise searches.

Before looking at some useful enrichment techniques, it is worth pointing out that a cognitive search system must be able to support any technique useful for enriching the data, since the right techniques vary with the needs and the nature of the data.
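To make the idea of pluggable enrichment concrete, here is a minimal Python sketch, assuming documents are plain dicts and that each enrichment step is any callable returning a dict of extracted information. The class `EnrichmentPipeline`, the `enrichment` key, and the `word_count` step are hypothetical illustrations, not Openk9's API; the point is that the original fields are kept and the extracted information is linked to them.

```python
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical types: a document is a plain dict, and an enricher is any
# callable that reads a document and returns the information it extracted.
Document = Dict[str, Any]
Enricher = Callable[[Document], Dict[str, Any]]


class EnrichmentPipeline:
    """Runs a configurable sequence of enrichment steps over a document."""

    def __init__(self) -> None:
        self._steps: List[Tuple[str, Enricher]] = []

    def register(self, name: str, enricher: Enricher) -> "EnrichmentPipeline":
        # Any technique can be plugged in, as long as it follows the callable contract.
        self._steps.append((name, enricher))
        return self

    def run(self, doc: Document) -> Document:
        # Copy the document so the original input is never modified.
        enriched: Document = dict(doc)
        enriched["enrichment"] = {}
        for name, enricher in self._steps:
            # Later steps can read what earlier steps have added.
            enriched["enrichment"][name] = enricher(enriched)
        return enriched


def word_count(doc: Document) -> Dict[str, Any]:
    # Placeholder step; a real deployment would plug in NER, classification, etc.
    return {"words": len(doc["content"].split())}


pipeline = EnrichmentPipeline().register("stats", word_count)
result = pipeline.run({"id": "1", "content": "Quarterly report for Acme Inc"})
print(result["enrichment"])  # {'stats': {'words': 5}}
```

Swapping techniques then only means registering a different callable; the pipeline itself stays unchanged.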

Here we look at some Natural Language Processing techniques, which are naturally suited to enriching textual content, by far the most commonly searched type of content.

Our blog hosts a series dedicated to the most relevant Natural Language Processing tasks, and some of the techniques listed here are covered there. Start reading from the first article.

Commonly used techniques are:

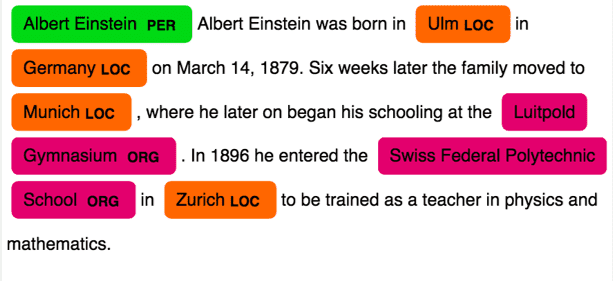

- Named Entity Recognition: recognizing entities and semantic concepts in the text. These entities can then be used to run more complex and precise searches.

- Relation extraction: extracting the relationships between entities.

- Text classification: intelligently categorizing text and using this information to search for content by category.

- Topic modeling: identifying the main topics of a piece of content and using this information to search for content that deals with a given topic.
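As a toy illustration of the first technique, the sketch below performs dictionary-based (gazetteer) entity recognition in plain Python. This is a deliberately simple stand-in, not a Machine Learning model: a real system would use a trained NER model, and the `GAZETTEER` contents are hypothetical.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical gazetteer mapping surface forms to entity types.
GAZETTEER: Dict[str, str] = {
    "Mike Brown": "PERSON",
    "Acme Inc": "ORGANIZATION",
    "Rome": "LOCATION",
}


def recognize_entities(text: str) -> List[Tuple[str, str, int]]:
    """Return (surface form, entity type, start offset) for every gazetteer match."""
    found: List[Tuple[str, str, int]] = []
    for surface, label in GAZETTEER.items():
        # Word boundaries prevent matches inside longer words.
        pattern = r"\b" + re.escape(surface) + r"\b"
        for match in re.finditer(pattern, text):
            found.append((surface, label, match.start()))
    # Report entities in the order they appear in the text.
    return sorted(found, key=lambda entity: entity[2])


print(recognize_entities("Mike Brown of Acme Inc presented the plan in Rome."))
```

The resulting (entity, type) pairs are the kind of structured information that enrichment attaches to the document before indexing.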

If the data to be ingested is of another nature, information extraction techniques suited to the use case can be applied. For sources with multimedia data such as audio, images, or video, tools implementing Machine Learning techniques such as Speech Recognition, Object Detection, Face Recognition, or Activity Recognition can be used.

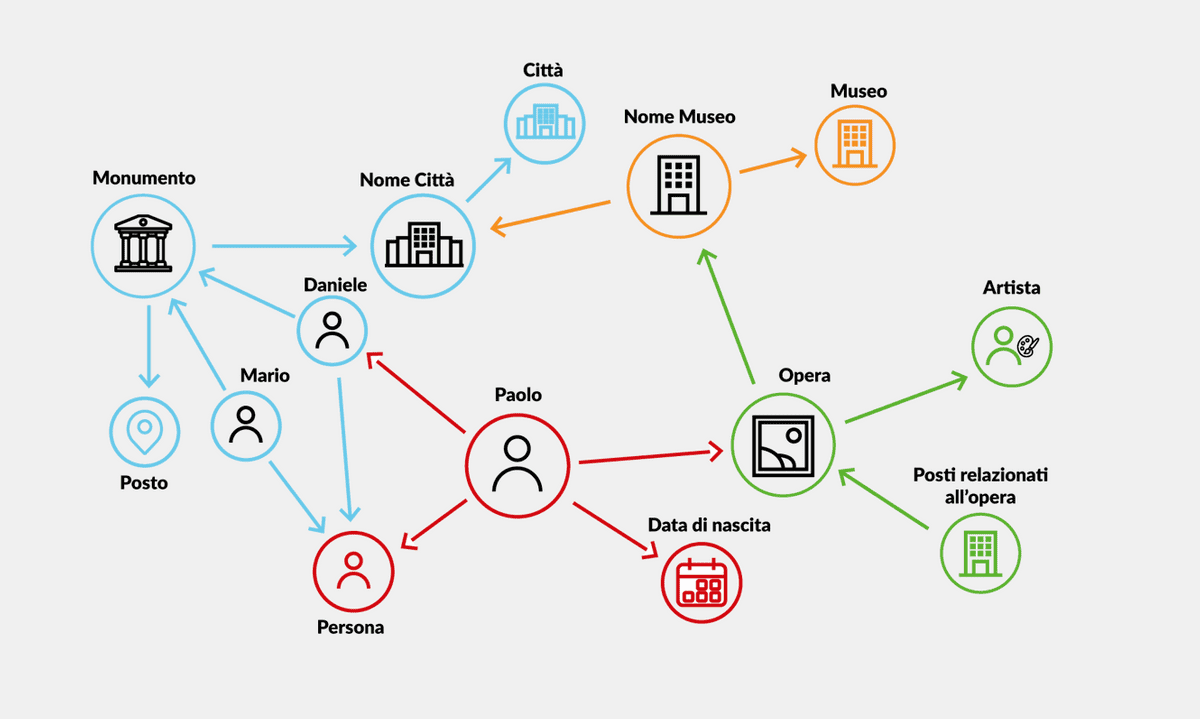

All the information extracted during the enrichment phase also helps populate a relationship graph, which the search system uses to carry out searches, analytics, and actions that extend the capabilities of the search engine.
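One simple way to picture such a graph, assuming edges are derived from co-occurrence (two entities mentioned in the same document are linked), is the adjacency-set sketch below. Proper relation extraction would also label each edge with the type of relationship; the `doc:`/`entity:` node prefixes and the sample data are hypothetical.

```python
from collections import defaultdict
from itertools import combinations
from typing import Dict, Iterable, Set


def build_relationship_graph(extracted: Dict[str, Iterable[str]]) -> Dict[str, Set[str]]:
    """Build an undirected graph linking documents, entities, and co-occurring entities."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for doc_id, entities in extracted.items():
        unique = sorted(set(entities))
        # Link each document to the entities found in it.
        for entity in unique:
            graph[doc_id].add(entity)
            graph[entity].add(doc_id)
        # Link entities that appear together in the same document.
        for a, b in combinations(unique, 2):
            graph[a].add(b)
            graph[b].add(a)
    return dict(graph)


extracted = {
    "doc:1": ["entity:Mike Brown", "entity:Acme Inc"],
    "doc:2": ["entity:Acme Inc", "entity:Business Plan"],
}
graph = build_relationship_graph(extracted)
# Everything connected to Acme Inc: both documents and both co-occurring entities.
print(sorted(graph["entity:Acme Inc"]))
```

Reading the neighbours of a node, as in the last line, is the kind of operation a search system can use to suggest related content or expand a query.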

Techniques for supporting users during search

The information produced by enrichment is an extremely valuable source of data for search. A cognitive search system uses it to:

- provide suggestions to the user while writing the query

- help formulate syntactically correct queries (autocorrection)

- understand the query in order to identify the user's intent. This activity builds a representation of the query that produces more accurate results

The first two points are addressed with modern NLP techniques for autocompletion and autocorrection.
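A minimal sketch of both features, assuming a small in-memory vocabulary (hypothetical here; in practice it could be built from indexed content and enrichment output): autocompletion is done with a binary search over a sorted list, and autocorrection with fuzzy matching from Python's standard `difflib` module. Modern systems typically use learned models instead; this only shows the mechanics.

```python
import bisect
import difflib
from typing import List

# Hypothetical vocabulary; it must stay sorted for the binary search to work.
VOCABULARY: List[str] = sorted(
    ["acme inc", "business plan", "mike brown", "pdf", "presentation", "report"]
)


def autocomplete(prefix: str, limit: int = 5) -> List[str]:
    """Return up to `limit` vocabulary entries that start with `prefix`."""
    prefix = prefix.lower()
    # First position where an entry >= prefix could appear.
    start = bisect.bisect_left(VOCABULARY, prefix)
    suggestions: List[str] = []
    for term in VOCABULARY[start:]:
        # Entries sharing the prefix are contiguous in a sorted list.
        if not term.startswith(prefix) or len(suggestions) == limit:
            break
        suggestions.append(term)
    return suggestions


def autocorrect(term: str, cutoff: float = 0.8) -> str:
    """Replace `term` with the most similar vocabulary entry, if any is close enough."""
    matches = difflib.get_close_matches(term.lower(), VOCABULARY, n=1, cutoff=cutoff)
    return matches[0] if matches else term


print(autocomplete("p"))         # ['pdf', 'presentation']
print(autocorrect("Mike Brwn"))  # mike brown
```

The `cutoff` parameter trades recall for safety: a higher value avoids "correcting" words that were simply not in the vocabulary.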

Query understanding, on the other hand, relies on several different functionalities, all of them tied to modern Natural Language Processing techniques.

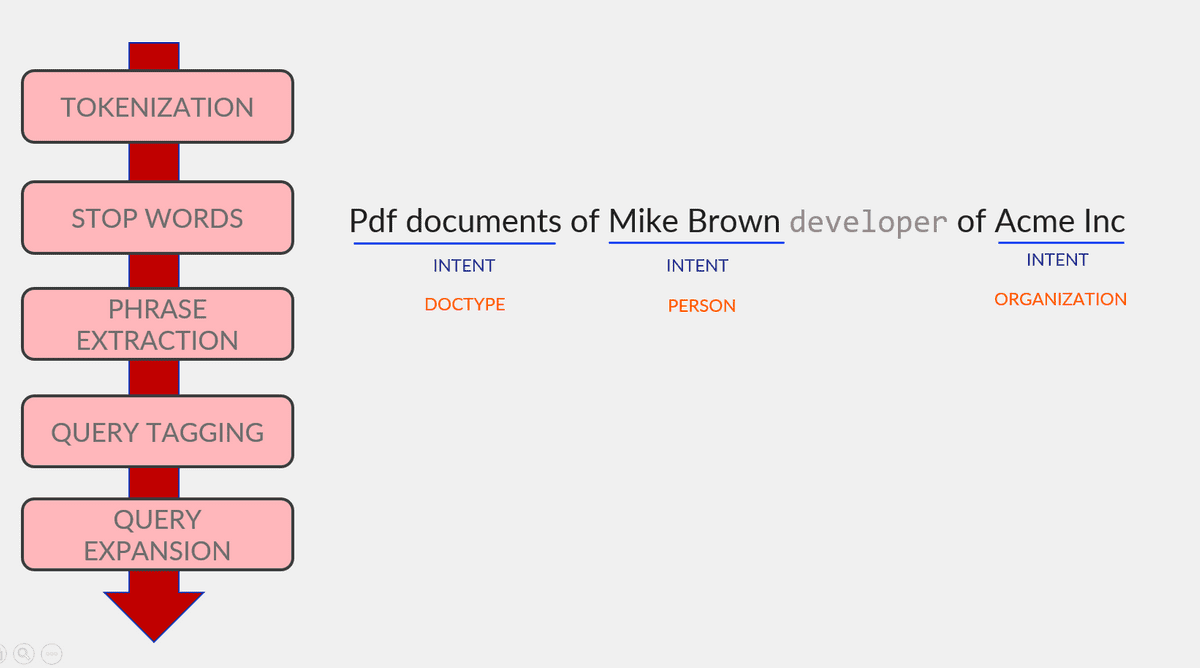

The following diagram gives a rough outline of the query understanding flow.

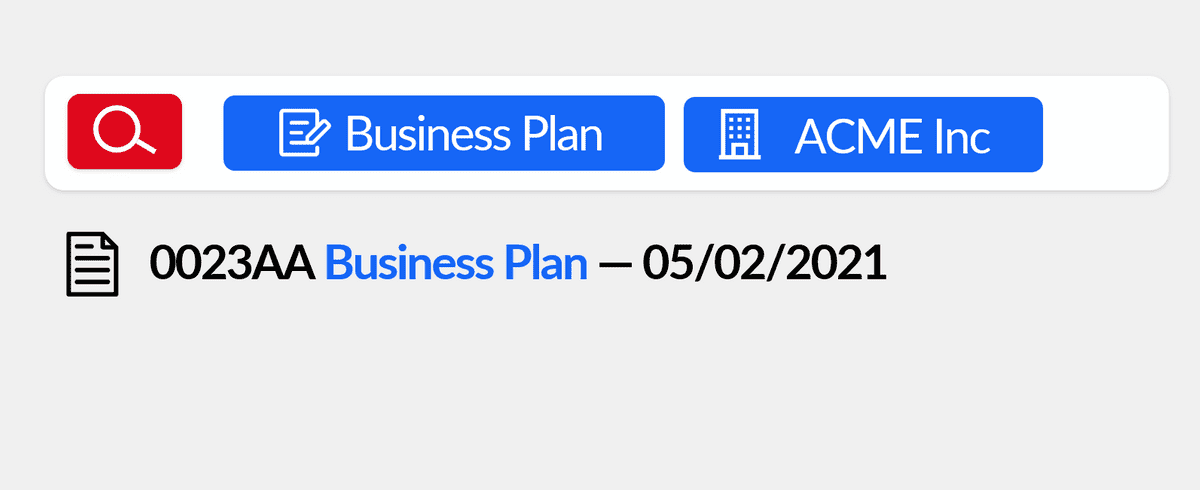

Each activity is carried out by intelligent tools that enrich the meaning of the query with appropriate annotations. Consider the example query "pdf documents by Mike Brown of Acme Inc". The query understanding tool:

- performs appropriate cleaning, for example tokenization and stopword removal

- identifies phrases with a relevant meaning within the query

- performs concept tagging, recognizing for example that Mike Brown is a person, pdf a specific document type, and Acme Inc an organization

- expands the meaning of the query using the knowledge base, noting that Mike Brown of Acme Inc is a developer
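The four steps above can be sketched in Python on the same example query. The stopword list, the concept dictionary, and the knowledge base below are hypothetical, hand-written stand-ins for the NLP models and knowledge base a real query understanding tool would use.

```python
import re
from typing import Dict, List

# Hypothetical resources standing in for real NLP models and a knowledge base.
STOPWORDS = {"a", "an", "by", "of", "the"}
CONCEPTS: Dict[str, str] = {
    "pdf": "DOCUMENT_TYPE",
    "mike brown": "PERSON",
    "acme inc": "ORGANIZATION",
}
KNOWLEDGE_BASE: Dict[str, Dict[str, str]] = {
    "mike brown": {"role": "developer", "organization": "acme inc"},
}


def understand_query(query: str) -> Dict[str, object]:
    # 1. Cleaning: lowercase tokenization and stopword removal.
    tokens = [t for t in re.findall(r"\w+", query.lower()) if t not in STOPWORDS]

    # 2. Phrase detection: greedily join adjacent tokens that form a known phrase.
    max_len = max(len(concept.split()) for concept in CONCEPTS)
    phrases: List[str] = []
    i = 0
    while i < len(tokens):
        size = 1
        for n in range(min(max_len, len(tokens) - i), 1, -1):
            if " ".join(tokens[i:i + n]) in CONCEPTS:
                size = n
                break
        phrases.append(" ".join(tokens[i:i + size]))
        i += size

    # 3. Concept tagging: attach a semantic type to every recognized phrase.
    concepts = {p: CONCEPTS[p] for p in phrases if p in CONCEPTS}

    # 4. Expansion: add facts about recognized phrases from the knowledge base.
    expansions = {p: KNOWLEDGE_BASE[p] for p in phrases if p in KNOWLEDGE_BASE}

    return {"phrases": phrases, "concepts": concepts, "expansions": expansions}


print(understand_query("pdf documents by Mike Brown of Acme Inc"))
```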

All this information is included in the description of the query sent to the search system, so that the results are as relevant as possible. In this case, greater importance is given to pdf documents by the developer Mike Brown of Acme Inc.
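One way to turn such an understood query into a request for Elasticsearch, the index Openk9 relies on, is a `bool` query: the free text goes in `must`, while the recognized concepts and the knowledge-base expansion become boosted `should` clauses that raise the score of matching documents without excluding others. The field names and boost values are assumptions for illustration, not Openk9's actual index mapping.

```python
import json
from typing import Any, Dict, List

# Hypothetical mapping from concept types to index fields.
FIELD_BY_CONCEPT = {
    "DOCUMENT_TYPE": "documentType",
    "PERSON": "entities.person",
    "ORGANIZATION": "entities.organization",
}

# Output of a query understanding step for "pdf documents by Mike Brown of Acme Inc".
understood: Dict[str, Any] = {
    "phrases": ["pdf", "documents", "mike brown", "acme inc"],
    "concepts": {"pdf": "DOCUMENT_TYPE", "mike brown": "PERSON", "acme inc": "ORGANIZATION"},
    "expansions": {"mike brown": {"role": "developer"}},
}


def to_search_request(understood: Dict[str, Any]) -> Dict[str, Any]:
    """Translate an understood query into an Elasticsearch bool query."""
    should: List[Dict[str, Any]] = []
    # Recognized concepts become boosted exact-match clauses.
    for phrase, concept in understood["concepts"].items():
        field = FIELD_BY_CONCEPT[concept]
        should.append({"term": {field: {"value": phrase, "boost": 2.0}}})
    # Knowledge-base facts add a smaller boost.
    for facts in understood["expansions"].values():
        if "role" in facts:
            should.append({"term": {"entities.role": {"value": facts["role"], "boost": 1.5}}})
    return {
        "query": {
            "bool": {
                # Free text still has to match; should clauses only raise the score.
                "must": [{"match": {"content": " ".join(understood["phrases"])}}],
                "should": should,
            }
        }
    }


print(json.dumps(to_search_request(understood), indent=2))
```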

Openk9

Openk9 is an open source cognitive search solution, and its code is available on GitHub.

Documentation and in-depth information are available on the Openk9 website.

Let's see how Openk9 implements the main characteristics of a cognitive search system.

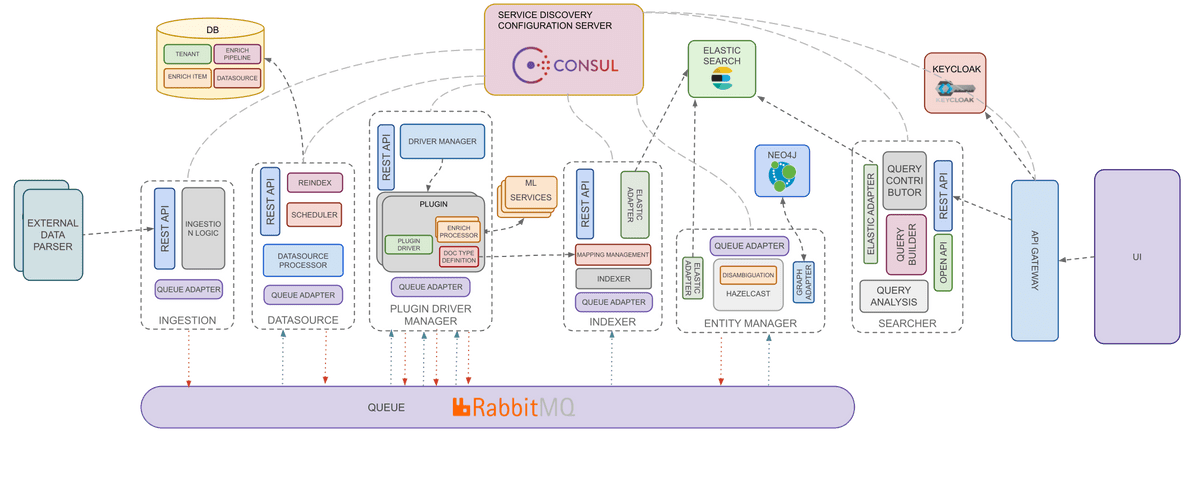

Data ingestion

Data flows into the system through a dedicated ingestion interface; an asynchronous, queue-based communication system then manages the flow all the way to indexing. Communication is fully asynchronous so that the back pressure caused by massive data ingestion can be handled efficiently.
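The back-pressure mechanism can be illustrated in-process with Python's `asyncio.Queue`, assuming a bounded queue stands in for the message broker used between services: when the queue is full, the producer's `put()` waits until the consumer catches up, so fast ingestion cannot overwhelm slower enrichment and indexing. Names, sizes, and the simulated delay are hypothetical.

```python
import asyncio
from typing import Dict, List, Tuple


async def ingest(queue: asyncio.Queue, documents: List[str], stats: Dict[str, int]) -> None:
    """Producer: pushes documents into the bounded queue."""
    for doc in documents:
        # put() suspends while the queue is full: this is where back pressure applies.
        await queue.put(doc)
        stats["max_pending"] = max(stats["max_pending"], queue.qsize())
    await queue.put(None)  # sentinel: no more documents


async def enrich_and_index(queue: asyncio.Queue, indexed: List[str]) -> None:
    """Consumer: slower stage standing in for enrichment and indexing."""
    while True:
        doc = await queue.get()
        if doc is None:
            break
        await asyncio.sleep(0.001)  # simulated processing time
        indexed.append(doc)


async def run(n_docs: int = 20, capacity: int = 4) -> Tuple[List[str], int]:
    queue: asyncio.Queue = asyncio.Queue(maxsize=capacity)
    indexed: List[str] = []
    stats = {"max_pending": 0}
    documents = [f"doc-{i}" for i in range(n_docs)]
    await asyncio.gather(ingest(queue, documents, stats), enrich_and_index(queue, indexed))
    return indexed, stats["max_pending"]


indexed, max_pending = asyncio.run(run())
# All 20 documents are indexed, with at most 4 ever waiting in the queue.
print(len(indexed), max_pending)
```

In a distributed deployment the same idea applies across services: a bounded queue or broker lets each stage consume at its own pace instead of being flooded.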

Enrichment and indexing

The data then flows into an enrichment phase, configured according to needs. Each step of the enrichment pipeline extracts new information and binds it to the data in a structured way. At the end of the enrichment phase, the enriched data is indexed in Elasticsearch and becomes searchable.

Searches can use both the fields of the original data and the information extracted and added during enrichment.

As an example, suppose we are dealing with a data source of business documents from a portal. Openk9 connects to this data source and periodically ingests the entire document base.

Each document goes through an enrichment pipeline consisting of two phases:

- Entity recognition

- Classification by document type
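A minimal sketch of this two-phase pipeline, assuming simple rules in place of ML models: organizations are recognized from a hypothetical list of known names, and the document type is chosen by counting hypothetical keywords per class. A real deployment would plug in trained NER and classification models.

```python
from typing import Any, Dict, List

# Hypothetical resources; a real deployment would use trained models instead.
KNOWN_ORGANIZATIONS: List[str] = ["Acme Inc", "Globex"]
DOC_TYPE_KEYWORDS: Dict[str, List[str]] = {
    "Business Plan": ["revenue", "market", "forecast", "strategy"],
    "Invoice": ["invoice", "amount due", "payment terms"],
    "Contract": ["agreement", "clause", "signatory"],
}


def recognize_organizations(text: str) -> List[str]:
    """Phase 1: entity recognition, reduced here to lookup of known names."""
    return [org for org in KNOWN_ORGANIZATIONS if org in text]


def classify_document(text: str) -> str:
    """Phase 2: pick the document type whose keywords occur most often."""
    lowered = text.lower()
    scores = {
        doc_type: sum(lowered.count(keyword) for keyword in keywords)
        for doc_type, keywords in DOC_TYPE_KEYWORDS.items()
    }
    best = max(scores, key=lambda doc_type: scores[doc_type])
    return best if scores[best] > 0 else "Other"


def enrich(document: Dict[str, Any]) -> Dict[str, Any]:
    """Run both phases and attach their output to a copy of the document."""
    text = document["content"]
    return {
        **document,
        "organizations": recognize_organizations(text),
        "documentType": classify_document(text),
    }


doc = {"id": "42", "content": "Acme Inc strategy: market analysis and a three-year revenue forecast."}
print(enrich(doc))
```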

This additional information allows users to run more precise and advanced searches, such as the following.

In this case, the goal is to search only content classified as Business Plan and related to the Acme Inc organization. The search is built from semantic filters rather than plain full-text matching, which yields more precise results.

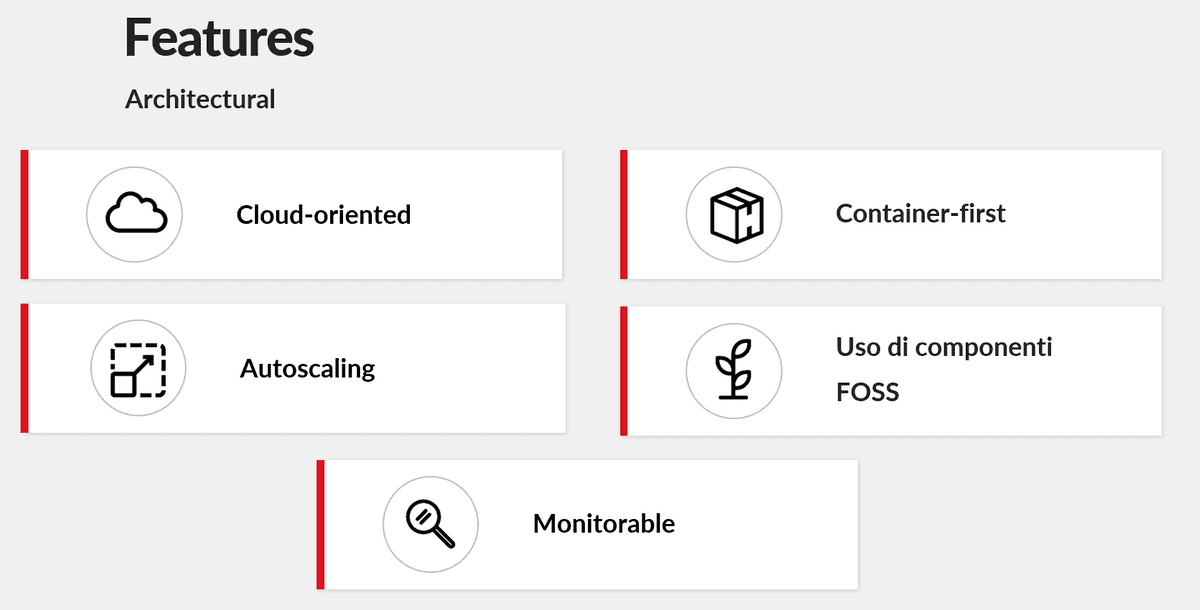
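In Elasticsearch Query DSL terms, a search like this Business Plan / Acme Inc example could be expressed with `bool` `filter` clauses, which match exact values without affecting scoring, optionally combined with a full-text `match`. The field names `documentType`, `organizations`, and `content` are hypothetical and assume the enrichment output is mapped as keyword fields.

```python
import json
from typing import Any, Dict


def semantic_search(document_type: str, organization: str, text: str = "") -> Dict[str, Any]:
    """Build a bool query restricted by enrichment-derived filters."""
    bool_query: Dict[str, Any] = {
        # Filter clauses match exact keyword values and do not affect scoring.
        "filter": [
            {"term": {"documentType": document_type}},
            {"term": {"organizations": organization}},
        ]
    }
    if text:
        # Optional full-text part, scored as usual.
        bool_query["must"] = [{"match": {"content": text}}]
    return {"query": {"bool": bool_query}}


print(json.dumps(semantic_search("Business Plan", "Acme Inc"), indent=2))
```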

Architectural features

Specific architectural choices were made when building Openk9, and they give the product some key characteristics.

The most important of these characteristics is scalability. A cognitive search engine must be able to scale easily in order to efficiently handle the varying loads that data ingestion places on it over time.

Scalability comes above all from the microservices architecture on which Openk9 is based, and from the fully asynchronous communication between the system's services.

The Openk9 website offers in-depth architectural documentation describing the different components of the system; consult it to learn more about the architectural aspects.

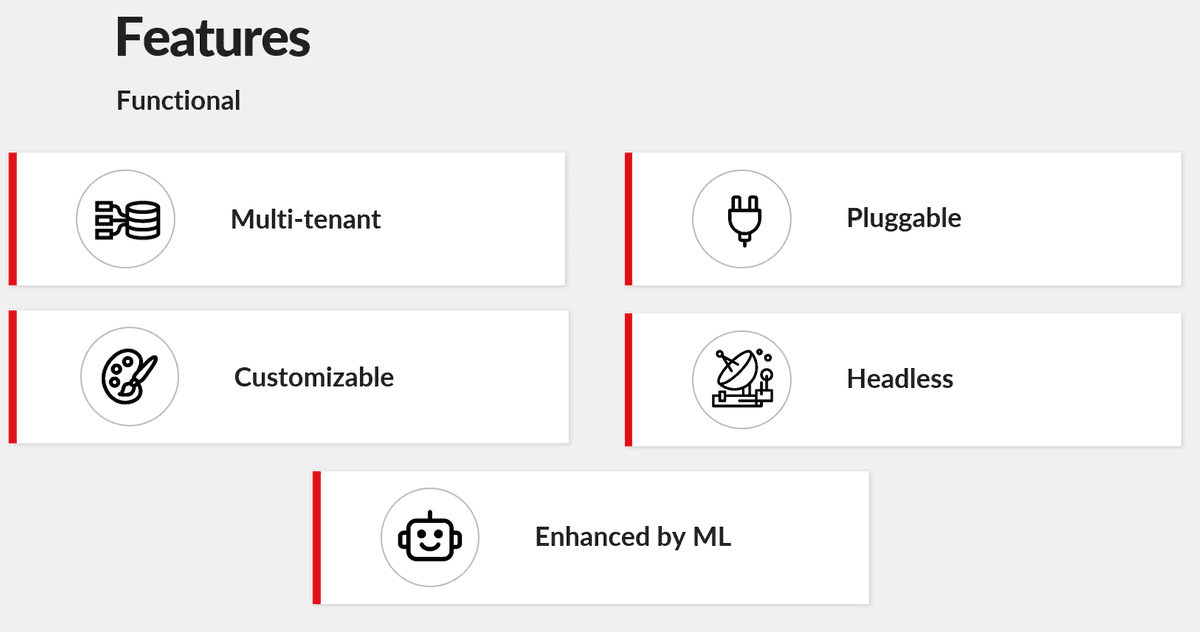

Functional Features

Openk9 also implements specific functional features that give it a strong focus on extensibility.

Pluggability and customization in particular are two fundamental aspects of Openk9. By defining specific plugins, its functional capabilities can be extended easily. A dedicated plugin can be defined for each new data source, specifying aspects such as:

- the connection to the external data source

- the structure of the indexed data

- the enrichment activity to be carried out on the data

- how the data is presented in the frontend
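The four aspects map naturally onto an interface. The Python abstract class below is a hypothetical illustration of such a contract, not Openk9's actual plugin API; the `MailPlugin` example and its fields are invented for the sketch.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict, Iterator, List


class DataSourcePlugin(ABC):
    """Hypothetical contract covering the four aspects a plugin defines."""

    @abstractmethod
    def fetch(self) -> Iterator[Dict[str, Any]]:
        """Connection: read raw records from the external data source."""

    @abstractmethod
    def to_document(self, record: Dict[str, Any]) -> Dict[str, Any]:
        """Data shape: map a raw record to the structure that gets indexed."""

    @abstractmethod
    def enrichment_steps(self) -> List[str]:
        """Enrichment: names of the steps to run on this source's documents."""

    @abstractmethod
    def render(self, document: Dict[str, Any]) -> str:
        """Presentation: how a search result from this source is shown in the frontend."""


class MailPlugin(DataSourcePlugin):
    """Example plugin for an in-memory list of e-mail messages."""

    def __init__(self, messages: List[Dict[str, str]]) -> None:
        self._messages = messages

    def fetch(self) -> Iterator[Dict[str, Any]]:
        yield from self._messages

    def to_document(self, record: Dict[str, Any]) -> Dict[str, Any]:
        return {
            "type": "email",
            "title": record["subject"],
            "content": record["body"],
            "sender": record["from"],
        }

    def enrichment_steps(self) -> List[str]:
        return ["entity_recognition"]

    def render(self, document: Dict[str, Any]) -> str:
        return f"{document['title']} (from {document['sender']})"


def ingest_source(plugin: DataSourcePlugin) -> List[Dict[str, Any]]:
    """Generic ingestion loop: the core system only talks to the plugin interface."""
    documents = []
    for record in plugin.fetch():
        document = plugin.to_document(record)
        document["enrichment_steps"] = plugin.enrichment_steps()
        documents.append(document)
    return documents


plugin = MailPlugin([{"subject": "Q3 business plan", "body": "Draft attached.", "from": "mike@acme.example"}])
docs = ingest_source(plugin)
print(plugin.render(docs[0]))  # Q3 business plan (from mike@acme.example)
```

Because `ingest_source` depends only on the abstract interface, a new source is supported by adding a new subclass, without touching the core loop.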

In this way, new data sources can be added in a fully pluggable manner, and the system handles them according to the custom logic defined in their plugin.

To learn more about how to create a new plugin for Openk9, visit the dedicated section of the Openk9 website.

Other relevant features

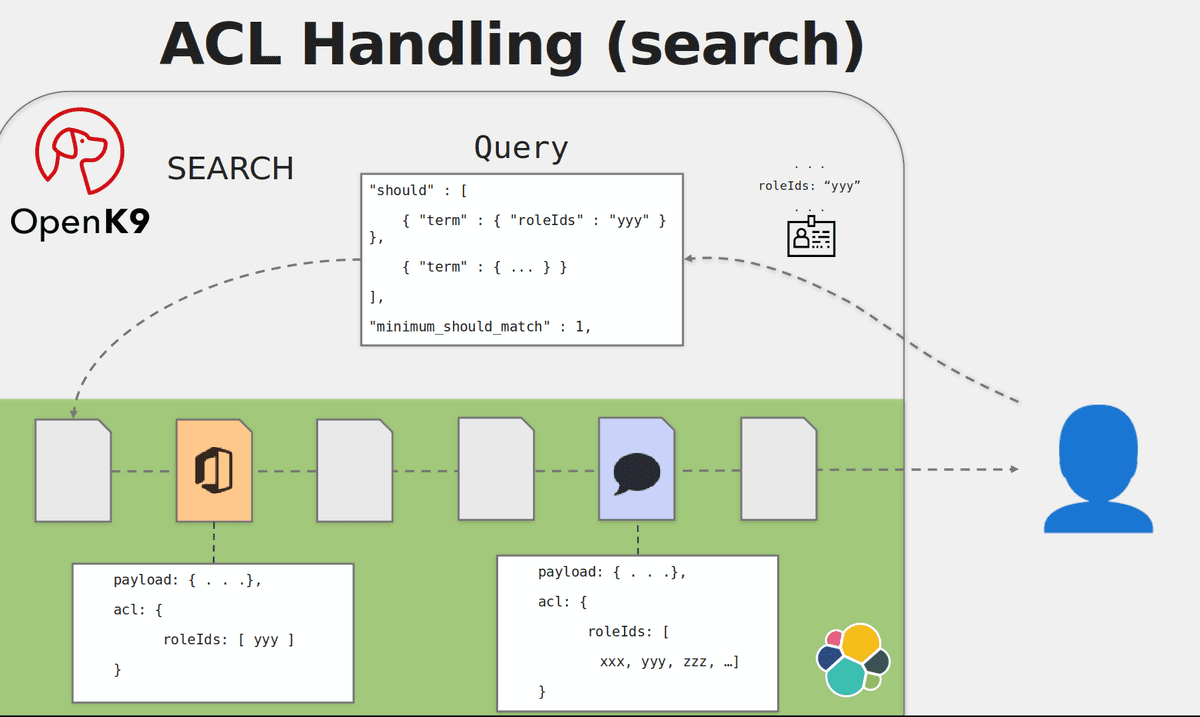

ACL management (access-control lists)

A cognitive search engine like Openk9 centralizes the content of an entire organization, or of several organizations, in a single database.

Isolation between organizations is usually handled by storing each tenant's data in separate indexes, so that each organization can access only the data belonging to its own tenant.

But how is access managed among users of the same organization? The same index may contain content that is visible to one user but not to another.

Here too, Openk9 handles access control logic in a pluggable way, because different data sources can have different logic.

For a Liferay portal, access control is role-based; for an IMAP server, on the other hand, the logic depends on senders, recipients, and so on.

For this reason, this logic can be defined for each data source in the corresponding plugin.

Following that logic, Openk9 indexes not only the content and metadata but also the access-control information. This information is then used to apply access control directly at search time, which makes access management very efficient and keeps search times very fast.
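The sketch below shows the general pattern of search-time access control, assuming each indexed document carries a hypothetical `acl` field listing the principals (roles, users, or mail addresses, depending on the plugin) allowed to see it. The user's query is wrapped in an Elasticsearch `bool` query with a `terms` filter on that field, so unauthorized documents are excluded by the engine itself; `matches_acl` is an in-memory equivalent used only to show the rule.

```python
from typing import Any, Dict, Set


def index_with_acl(document: Dict[str, Any], allowed: Set[str]) -> Dict[str, Any]:
    """Index time: store the principals allowed to see the document next to its content."""
    return {**document, "acl": sorted(allowed)}


def secure_query(user_query: Dict[str, Any], principals: Set[str]) -> Dict[str, Any]:
    """Search time: wrap the user's query with a filter on the ACL field."""
    return {
        "query": {
            "bool": {
                "must": [user_query],
                # terms matches documents sharing at least one principal with the user.
                "filter": [{"terms": {"acl": sorted(principals)}}],
            }
        }
    }


def matches_acl(document: Dict[str, Any], principals: Set[str]) -> bool:
    """In-memory equivalent of the terms filter, for illustration only."""
    return bool(set(document["acl"]) & principals)


doc = index_with_acl({"id": "7", "content": "Q3 plan"}, {"role:sales", "user:mike"})
print(matches_acl(doc, {"role:sales", "user:anna"}))  # True
print(matches_acl(doc, {"role:hr"}))                  # False
```

Filtering inside the query, rather than post-filtering results in application code, keeps pagination and result counts correct and avoids fetching documents the user cannot see.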

Headless Search API

Openk9 offers headless APIs for content search and for all related activities, such as autocompletion and query understanding.

For more information, explore the [API documentation](https://www.openk9.io/docs/api/searcher-api).

The headless nature of the APIs makes it easy to connect Openk9 to many kinds of clients, such as standalone frontends, web portals, chatbots, or voice assistants.

The Search APIs are also designed to be extended and customized, so that search options can be refined according to context and domain.

New search functions can be added to apply new search logic, and the query understanding capabilities can be extended by defining new understanding rules and tools.
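As an illustration of extending query understanding with new rules, here is a hypothetical rule registry in Python: each rule inspects the raw query and may return hints, and new rules are added simply by decorating a function. This is not Openk9's API; the rule names and hint keys are invented for the sketch.

```python
import re
from typing import Callable, Dict, List, Optional

Rule = Callable[[str], Optional[Dict[str, str]]]
RULES: List[Rule] = []


def rule(func: Rule) -> Rule:
    """Decorator that registers a new query understanding rule."""
    RULES.append(func)
    return func


@rule
def document_type(query: str) -> Optional[Dict[str, str]]:
    # Recognize a few file formats mentioned in the query.
    match = re.search(r"\b(pdf|docx|xlsx)\b", query, re.IGNORECASE)
    return {"documentType": match.group(1).lower()} if match else None


@rule
def year(query: str) -> Optional[Dict[str, str]]:
    # Recognize a four-digit year between 1900 and 2099.
    match = re.search(r"\b(?:19|20)\d{2}\b", query)
    return {"year": match.group(0)} if match else None


def extract_hints(query: str) -> Dict[str, str]:
    """Apply every registered rule and merge the hints they return."""
    hints: Dict[str, str] = {}
    for current_rule in RULES:
        result = current_rule(query)
        if result:
            hints.update(result)
    return hints


print(extract_hints("PDF reports from 2023"))  # {'documentType': 'pdf', 'year': '2023'}
```

Adding a capability is then a matter of decorating one more function with `@rule`; the existing rules and `extract_hints` do not change.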

Conclusion

Openk9 is a modern and powerful search system. Through its characteristics, it aims to meet the full range of search needs of today's organizations. Its use of artificial intelligence techniques to manage a whole series of aspects is in line with the trend toward increasingly precise and powerful search. Openk9 also aims to stand out from its competitors, mainly thanks to its ability to be easily extended and customized: fundamental properties for meeting the great heterogeneity of real-world needs.

So what are you waiting for? The Openk9 website offers an in-depth guide to installing and running Openk9 on your machine. It includes documentation for doing everything with Docker Compose, documentation for a more robust installation on Kubernetes clusters, and documentation for configuring the main aspects of the product.

To try Openk9 right away, use the online demo environment and test the search functions directly.